Ensuring SB 576 Compliance in Streaming Ads with LM1 Loudness Metadata

1. Introduction: SB 576 and the Challenge for Streaming Services

Beginning July 1, 2026, the State of California will enforce SB 576, which prohibits a “video streaming service” from transmitting the audio of commercial advertisements louder than the video content the advertisements accompany.

This statute builds upon the federal Commercial Advertisement Loudness Mitigation Act (CALM Act), which applies to television broadcast stations, cable operators, and multichannel video programming distributors but does not cover internet-protocol streaming services.

Accordingly, streaming platforms (including those offering ad-supported tiers) that serve California consumers must ensure that no advertisement’s audio exceeds the loudness of the program content it accompanies. Meeting this requirement at scale calls for robust, automated loudness-management workflows.

2. Why LM1 is the Best Solution for SB 576 Compliance

SB 576 imposes a relative loudness limit on commercial advertisements in streaming environments: a video streaming service may not transmit the audio of commercial advertisements louder than the video content the advertisements accompany.
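Because the rule compares an ad against the program it accompanies rather than against a fixed number, a compliance check reduces to comparing two integrated-loudness values. The minimal sketch below assumes both values are already known in LUFS (for example, from ITU-R BS.1770 measurement). `Segment`, `ad_exceeds_program`, and `required_ad_gain_db` are hypothetical names, and the `tolerance_lu` parameter is an assumption, since the requirement as summarized here specifies no measurement method or tolerance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """A stretch of playback and its integrated loudness in LUFS (hypothetical type)."""
    kind: str              # "program" or "ad"
    loudness_lufs: float


def ad_exceeds_program(ad: Segment, program: Segment, tolerance_lu: float = 0.0) -> bool:
    """True if the ad would play louder than the program it accompanies.

    tolerance_lu is an assumed policy knob, not something defined by SB 576.
    """
    return ad.loudness_lufs > program.loudness_lufs + tolerance_lu


def required_ad_gain_db(ad: Segment, program: Segment) -> float:
    """Attenuation (never a boost) that brings the ad down to the program level."""
    return min(0.0, program.loudness_lufs - ad.loudness_lufs)


program = Segment("program", -24.0)
ad = Segment("ad", -18.5)
print(ad_exceeds_program(ad, program))   # True
print(required_ad_gain_db(ad, program))  # -5.5
```

This check only attenuates loud ads; quieter ads are left alone, which is the minimum the rule requires.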

For streaming operators, the key technical challenges include:

- Ad content and program content may originate via different delivery paths or systems, leading to inconsistent loudness levels when stitched together.

- Separate production of program and ad assets may result in incomplete or inconsistent loudness metadata.

- The perceived loudness varies greatly depending on device type, application, and playback context.

- Unlike linear broadcast, streaming workflows often include dynamic ad insertion (DAI/SSAI), adaptive bitrates, device-specific decoders, and heterogeneous inventory, complicating loudness control.

By adopting LM1’s metadata-based approach, streaming services can address each of these issues efficiently:

- The ad server generates LM1 loudness metadata for each ad and sends it as a side-chain along with the audio stream. The client compares this metadata against the program’s loudness level and adjusts dynamically in real time.

- The server no longer needs to remaster or re-encode ads, reducing operational complexity and cost.

- On the client side, loudness normalization can be achieved simply by referencing LM1 metadata—no heavy computation required.

- This ensures a consistent audio experience across all devices and platforms.

- And since LM1 is standardized in ANSI/CTA-2075.1, it’s already a trusted and validated solution for OTT/video streaming loudness management.

In short, LM1 simultaneously ensures SB 576 compliance, enhances user experience, and simplifies operations—a proven, standards-based solution ready for deployment.

3. Technical Background: What is LM1?

LM1 is a metadata-driven loudness normalization technology developed by Gaudio Lab, engineered to maintain consistent audio loudness across program and advertisement assets in broadcast, streaming, and on-demand environments.

Key Features

1. Standardized Technology

- Established as the standard TTAK.KO-07.0146 (2020) by the Telecommunications Technology Association of Korea.

- Incorporated into the standard ANSI/CTA-2075.1-2022: Loudness Standard for Over-the-Top Television and Online Video Distribution for Mobile and Fixed Devices – LM1

2. Side-chain Metadata Structure

- Generates very compact metadata (typically < 1 KB per asset) that runs as a side-chain alongside the audio stream.

- Allows the original audio to remain untouched—no alteration of program/ad audio is required.

- Enables real-time normalization in the client environment with minimal latency.
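To make the compact side-chain idea concrete, here is a sketch of a per-asset loudness record that travels separately from the audio and serializes to well under 1 KB. The field names and the JSON encoding are hypothetical; the actual LM1 metadata format is not described in this post, so treat this only as an illustration of the size and separation properties.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class LoudnessMetadata:
    # Hypothetical fields for illustration only; not the real LM1 schema.
    asset_id: str
    integrated_lufs: float   # gated integrated loudness (ITU-R BS.1770)
    true_peak_dbtp: float    # maximum true-peak level
    method: str = "ITU-R BS.1770-4"

    def to_bytes(self) -> bytes:
        # Compact JSON: no extra whitespace, stable key order.
        return json.dumps(asdict(self), separators=(",", ":"), sort_keys=True).encode("utf-8")

    @classmethod
    def from_bytes(cls, data: bytes) -> "LoudnessMetadata":
        return cls(**json.loads(data.decode("utf-8")))


meta = LoudnessMetadata(asset_id="ad-0042", integrated_lufs=-18.5, true_peak_dbtp=-1.2)
payload = meta.to_bytes()        # the audio itself is never read or modified here
print(len(payload) < 1024)       # True
assert LoudnessMetadata.from_bytes(payload) == meta
```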

3. Accuracy & Performance

- Uses the ITU-R BS.1770 standards (up to BS.1770-5) for loudness measurement.

- Zero-latency normalization: the loudness gain itself adds no processing delay; when the Peak Limiter is applied, its delay is typically ≤ 0.001 sec (1 ms).

- Supports advanced modes such as Dialogue (Anchor) Normalization, Transparent Mode, and Quality Secure Mode.

- Ultra-low CPU load, optimized for both server and client environments.

- Supports a broad range of device and application platforms, including mobile, smart TV, web, automotive, and embedded systems.

- Custom integration available for diverse workflows and applications.

- Proven reliability—used daily by over 50 million end users worldwide.

4. How It Works

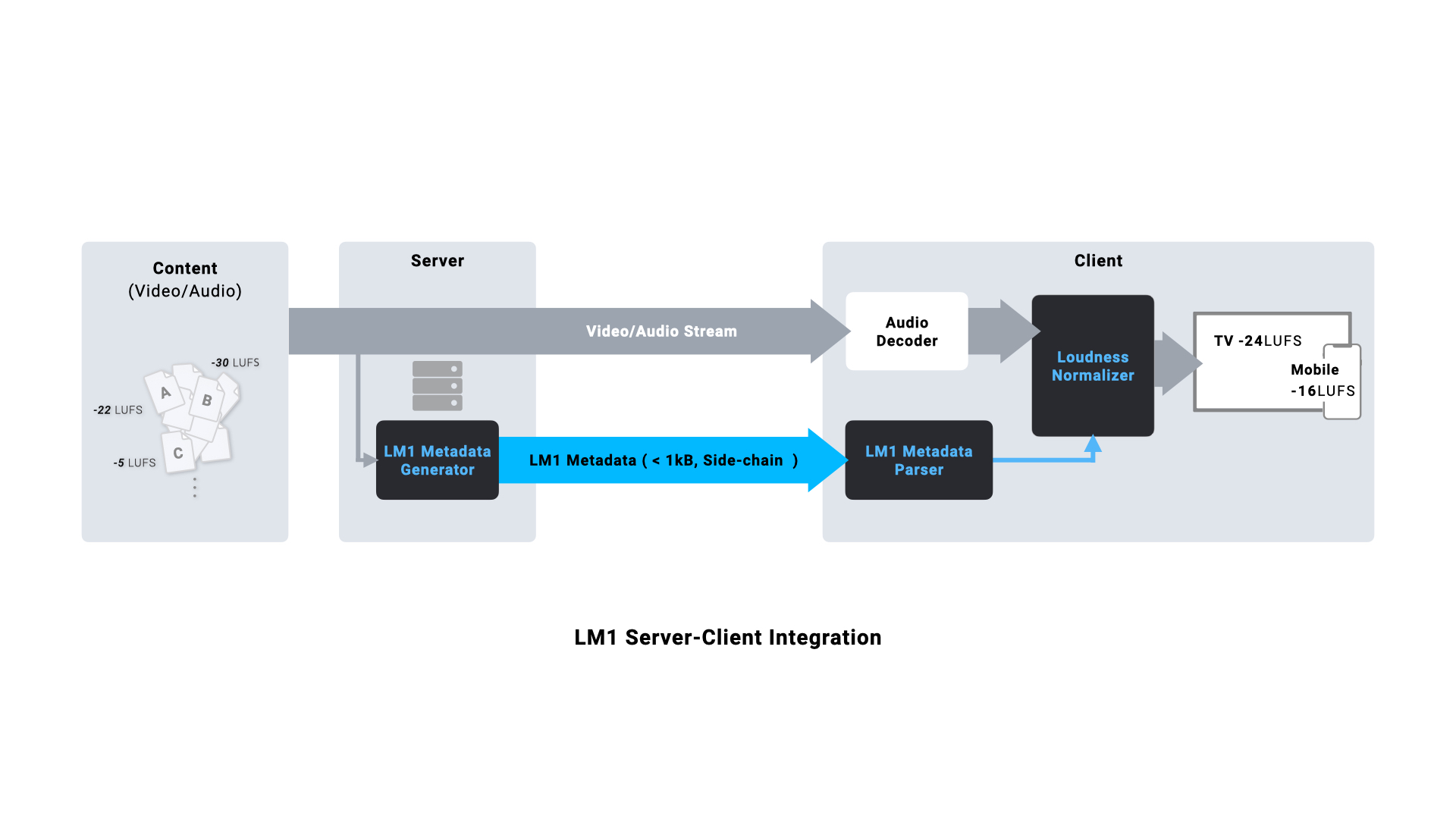

LM1 measures the loudness of each audio/video asset on the server side and generates side-chain metadata (LM1 Metadata). During playback, the client parses this metadata and applies real-time loudness normalization.

[Figure 1. LM1 Server–Client Architecture]

Metadata Generation

- The LM1 Metadata Generator measures and analyzes the loudness of each content item (based on ITU-R BS.1770).

- Generates an LM1 metadata file of < 1 KB per content item.
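The measurement step can be sketched with the two-stage gating defined in ITU-R BS.1770 (revision 2 onward): 400 ms blocks with 75% overlap, an absolute gate at −70 LKFS, then a relative gate 10 LU below the loudness of the blocks that passed the first gate. This is a minimal, unoptimized sketch, not Gaudio Lab's generator. It assumes the input channels have already been K-weighted (the K-weighting prefilter is omitted for brevity), and the function names are hypothetical.

```python
import math
from typing import List, Sequence

ABSOLUTE_GATE_LKFS = -70.0
RELATIVE_GATE_LU = -10.0


def block_mean_squares(channels: Sequence[Sequence[float]], sample_rate: int) -> List[List[float]]:
    """Per-channel mean square over 400 ms blocks with a 100 ms hop (75% overlap).

    Assumes `channels` already holds K-weighted samples.
    """
    size = round(0.4 * sample_rate)
    hop = round(0.1 * sample_rate)
    n = len(channels[0])
    return [
        [sum(x * x for x in ch[start:start + size]) / size for ch in channels]
        for start in range(0, n - size + 1, hop)
    ]


def loudness(z: Sequence[float], weights: Sequence[float]) -> float:
    """BS.1770 loudness from per-channel mean squares: -0.691 + 10*log10(sum G_i * z_i)."""
    power = sum(g * zi for g, zi in zip(weights, z))
    return -0.691 + 10.0 * math.log10(power) if power > 0 else float("-inf")


def _mean_per_channel(blocks: Sequence[Sequence[float]]) -> List[float]:
    return [sum(col) / len(blocks) for col in zip(*blocks)]


def integrated_loudness(blocks: Sequence[Sequence[float]], weights: Sequence[float]) -> float:
    """Gated integrated loudness in LKFS. Channel weights G_i: 1.0 for L/R/C,
    1.41 for surround channels; the LFE channel is excluded."""
    # Stage 1: drop blocks at or below the absolute gate.
    stage1 = [b for b in blocks if loudness(b, weights) > ABSOLUTE_GATE_LKFS]
    if not stage1:
        return float("-inf")
    # Stage 2: relative gate, 10 LU below the loudness of the stage-1 blocks.
    gate = loudness(_mean_per_channel(stage1), weights) + RELATIVE_GATE_LU
    stage2 = [b for b in stage1 if loudness(b, weights) > gate]
    return loudness(_mean_per_channel(stage2), weights)


# One second of a constant 0.1 signal on both stereo channels (treated as already K-weighted).
stereo = [[0.1] * 48000, [0.1] * 48000]
blocks = block_mean_squares(stereo, 48000)
print(round(integrated_loudness(blocks, [1.0, 1.0]), 2))  # -17.68
```

The relative gate is what keeps long quiet passages from dragging the measured value down, so the number reflects the loudness listeners actually hear.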

Transmission (Side-chain)

- The LM1 metadata is transmitted to the client as a separate side-chain alongside the audio stream. Depending on the delivery protocol, it can be carried as timed metadata: for example, as timed ID3 metadata in HLS streams or as an emsg (Event Message Box) in DASH streams.
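For DASH delivery, a loudness payload can ride in an emsg box inserted into a media segment. The sketch below builds and parses a version-1 emsg box following the DASH event message layout (ISO/IEC 23009-1). The scheme URI `urn:example:lm1`, the event values, and the JSON payload are hypothetical placeholders, not identifiers defined by LM1 or ANSI/CTA-2075.1.

```python
import struct
from typing import Dict


def build_emsg_v1(scheme_id_uri: str, value: str, timescale: int, presentation_time: int,
                  event_duration: int, event_id: int, message_data: bytes) -> bytes:
    """Serialize a version-1 DASH Event Message box ('emsg')."""
    body = struct.pack(">B3s", 1, b"\x00\x00\x00")          # FullBox: version=1, flags=0
    body += struct.pack(">IQII", timescale, presentation_time, event_duration, event_id)
    body += scheme_id_uri.encode("utf-8") + b"\x00"          # null-terminated strings
    body += value.encode("utf-8") + b"\x00"
    body += message_data
    return struct.pack(">I4s", 8 + len(body), b"emsg") + body  # 32-bit size includes header


def parse_emsg_v1(box: bytes) -> Dict[str, object]:
    """Inverse of build_emsg_v1 (version 1 only, no 64-bit largesize)."""
    size, box_type = struct.unpack_from(">I4s", box, 0)
    if box_type != b"emsg" or box[8] != 1:
        raise ValueError("not a version-1 emsg box")
    timescale, presentation_time, duration, event_id = struct.unpack_from(">IQII", box, 12)
    scheme, value, data = box[32:size].split(b"\x00", 2)
    return {"scheme_id_uri": scheme.decode(), "value": value.decode(),
            "timescale": timescale, "presentation_time": presentation_time,
            "event_duration": duration, "id": event_id, "message_data": data}


payload = b'{"asset_id":"ad-0042","integrated_lufs":-18.5}'
box = build_emsg_v1("urn:example:lm1", "1", 1000, 30_000, 15_000, 42, payload)
print(parse_emsg_v1(box)["message_data"] == payload)  # True
```

With a timescale of 1000, the event above starts at 30 s and lasts 15 s, matching an ad break at that point in the presentation.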

Client Processing

- The client decodes the audio normally while the LM1 parser feeds the metadata to the Loudness Normalizer.

- Output targets are adjusted per device (e.g., TV = −24 LUFS, Mobile = −16 LUFS), ensuring a consistent listening experience across platforms.
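The per-device adjustment amounts to a gain of (target − measured) dB, converted to a linear factor 10^(gain/20) and applied to the decoded samples. In this minimal sketch the target table reuses the example values above; the boost cap and the hard clip (a crude stand-in for the Peak Limiter) are assumptions, and none of the names come from the LM1 SDK.

```python
from typing import Dict, List

# Example per-device targets taken from the text; real deployments may differ.
DEVICE_TARGET_LUFS: Dict[str, float] = {"tv": -24.0, "mobile": -16.0}


def normalization_gain_db(measured_lufs: float, device: str, max_boost_db: float = 6.0) -> float:
    """Gain that moves an asset from its measured loudness to the device target.

    Boosts are capped (assumed policy) because large boosts raise clipping risk;
    attenuation is never capped.
    """
    gain = DEVICE_TARGET_LUFS[device] - measured_lufs
    return min(gain, max_boost_db)


def apply_gain(samples: List[float], gain_db: float) -> List[float]:
    """Scale float PCM samples by gain_db and hard-clip to [-1.0, 1.0]."""
    factor = 10.0 ** (gain_db / 20.0)
    return [max(-1.0, min(1.0, s * factor)) for s in samples]


# An asset measured at -20 LUFS is turned down 4 dB on TV and up 4 dB on mobile.
print(normalization_gain_db(-20.0, "tv"))      # -4.0
print(normalization_gain_db(-20.0, "mobile"))  # 4.0
```

Because only a scalar gain is derived from the metadata, the per-sample cost on the client is a single multiply (plus limiting), which is consistent with the low CPU load described earlier.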

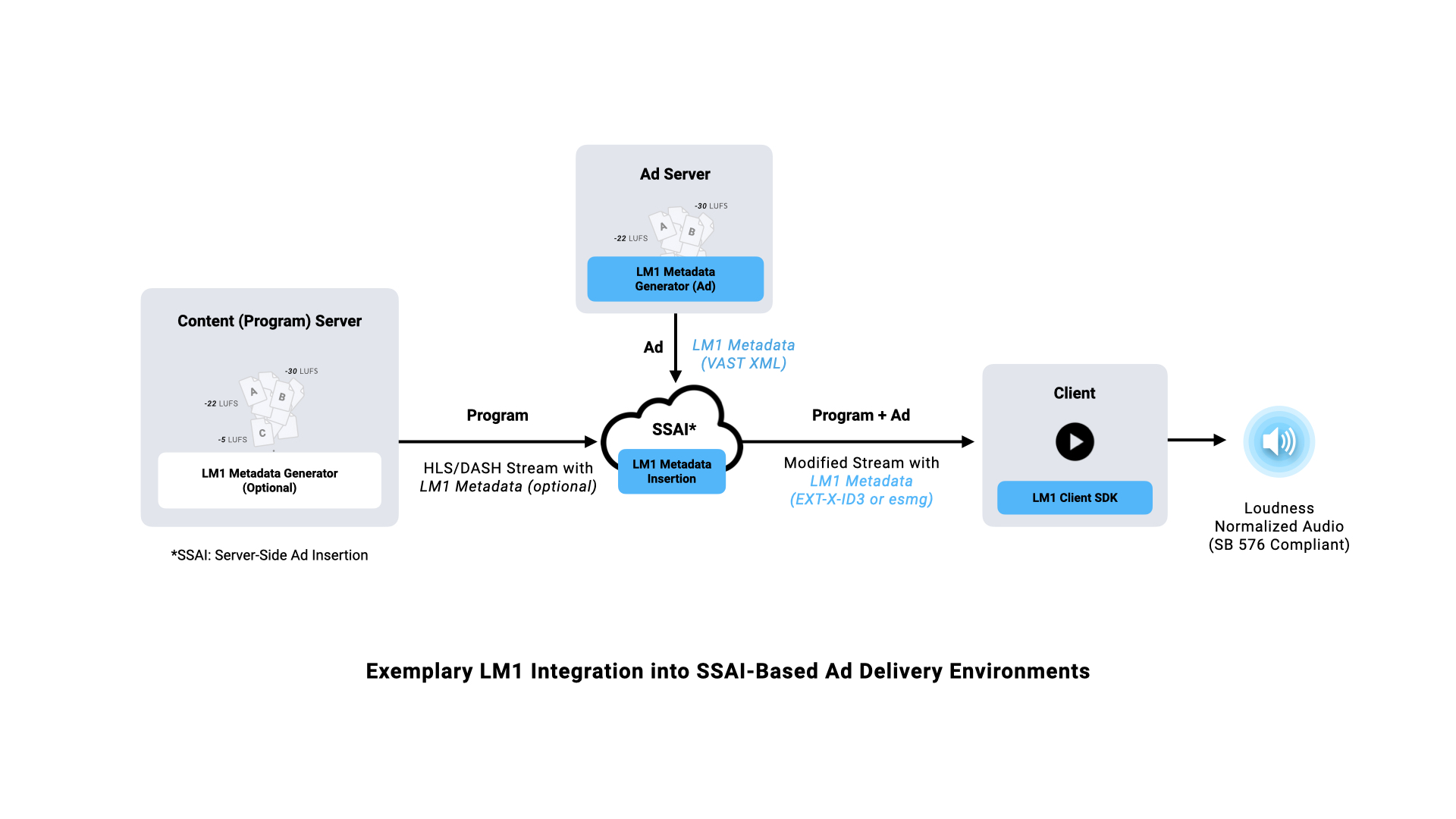

Figure 2 illustrates how LM1 can be integrated into a Server-Side Ad Insertion (SSAI) workflow to comply with SB 576. The same concept also applies to client-side ad insertion (CSAI).

[Figure 2. LM1 in an SSAI Environment]

Content Server (Program, optional)

- Measures the loudness of the program audio and generates the corresponding LM1 metadata.

- Embeds the metadata into the audio stream (HLS or DASH) as Timed Metadata.

- Services that have already normalized the program audio to a specific target loudness level may omit this step.

Ad Server

- Analyzes the loudness of each ad’s audio track and generates the corresponding LM1 metadata.

- Delivers that metadata to the SSAI server either through a VAST Extension or in a sidecar metadata format.

- When VAST is used, the ad server returns VAST XML that carries the LM1 metadata in the Extension field, together with the existing ad metadata such as media URL, ad ID, duration, and tracking information.
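The VAST hand-off can be sketched with Python's standard `xml.etree.ElementTree`. This is a deliberately trimmed VAST 4.x InLine ad (a real response also needs elements such as `Impression`), and the `type="LM1"` attribute and JSON payload inside `Extension` are hypothetical conventions for illustration, not a published schema.

```python
import json
import xml.etree.ElementTree as ET
from typing import Optional


def build_vast(ad_id: str, media_url: str, duration: str, lm1: dict) -> str:
    """Return a minimal VAST document whose Extensions carry LM1 loudness metadata."""
    vast = ET.Element("VAST", version="4.2")
    inline = ET.SubElement(ET.SubElement(vast, "Ad", id=ad_id), "InLine")
    ET.SubElement(inline, "AdSystem").text = "ExampleAdServer"  # hypothetical
    ET.SubElement(inline, "AdTitle").text = ad_id
    linear = ET.SubElement(
        ET.SubElement(ET.SubElement(inline, "Creatives"), "Creative"), "Linear")
    ET.SubElement(linear, "Duration").text = duration
    media_file = ET.SubElement(ET.SubElement(linear, "MediaFiles"), "MediaFile",
                               delivery="progressive", type="video/mp4",
                               width="1920", height="1080")
    media_file.text = media_url
    # Hypothetical convention: LM1 metadata as JSON text in <Extension type="LM1">.
    ext = ET.SubElement(ET.SubElement(inline, "Extensions"), "Extension", type="LM1")
    ext.text = json.dumps(lm1, separators=(",", ":"))
    return ET.tostring(vast, encoding="unicode")


def read_lm1(vast_xml: str) -> Optional[dict]:
    """What an SSAI server might do: pull the LM1 extension out of a VAST response."""
    for ext in ET.fromstring(vast_xml).iter("Extension"):
        if ext.get("type") == "LM1" and ext.text:
            return json.loads(ext.text)
    return None


xml_text = build_vast("ad-0042", "https://ads.example.com/ad-0042.mp4", "00:00:15",
                      {"integrated_lufs": -18.5, "true_peak_dbtp": -1.2})
print(read_lm1(xml_text))  # {'integrated_lufs': -18.5, 'true_peak_dbtp': -1.2}
```

Keeping the loudness data inside `Extensions` means ad servers and SSAI servers that do not understand it can simply ignore it, while the rest of the VAST response is unchanged.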

SSAI Server (Ad Assembler)

- Combines the program and ad streams into a single continuous playback stream.

- Within this process, the Manifest Stitcher aligns program and ad segments based on the HLS or DASH manifest, converts the LM1 metadata received from the Ad Server into Timed Metadata (e.g., timed ID3 metadata or an EXT-X-DATERANGE tag for HLS, or an emsg box or MPD EventStream for DASH), and places it at each ad insertion point.
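For HLS, one option for the Manifest Stitcher is an EXT-X-DATERANGE tag (RFC 8216) with a client-defined `X-` attribute placed at the ad break. The sketch below assumes the stitcher already knows the URI of the first ad segment and the wall-clock start of the break; the attribute name `X-COM-EXAMPLE-LM1` and the base64-encoded JSON payload are hypothetical. RFC 8216 requires a playlist containing EXT-X-DATERANGE to also contain an EXT-X-PROGRAM-DATE-TIME tag, and the event's timing comes from START-DATE rather than from where the tag sits.

```python
import base64
import json
from typing import Dict


def daterange_tag(event_id: str, start_date: str, duration_s: float, lm1: Dict) -> str:
    """Build an EXT-X-DATERANGE tag carrying LM1 metadata in a client attribute."""
    b64 = base64.b64encode(json.dumps(lm1, separators=(",", ":")).encode("utf-8")).decode("ascii")
    return (f'#EXT-X-DATERANGE:ID="{event_id}",START-DATE="{start_date}",'
            f'DURATION={duration_s:.3f},X-COM-EXAMPLE-LM1="{b64}"')


def stitch_tag(playlist: str, first_ad_uri: str, tag: str) -> str:
    """Insert `tag` just ahead of the #EXTINF line of the first ad segment."""
    lines = playlist.splitlines()
    uri_index = lines.index(first_ad_uri)  # ValueError if the ad segment is missing
    has_extinf = uri_index > 0 and lines[uri_index - 1].startswith("#EXTINF")
    lines.insert(uri_index - 1 if has_extinf else uri_index, tag)
    return "\n".join(lines) + "\n"


playlist = """#EXTM3U
#EXT-X-VERSION:7
#EXT-X-TARGETDURATION:6
#EXT-X-PROGRAM-DATE-TIME:2026-07-01T20:00:00.000Z
#EXTINF:6.0,
program_001.ts
#EXT-X-DISCONTINUITY
#EXTINF:6.0,
ad_0042_001.ts
#EXTINF:6.0,
ad_0042_002.ts
#EXT-X-ENDLIST
"""
tag = daterange_tag("ad-0042", "2026-07-01T20:00:06.000Z", 12.0, {"integrated_lufs": -18.5})
print(stitch_tag(playlist, "ad_0042_001.ts", tag))
```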

Client (Player + LM1 SDK)

- The client uses the LM1 Client SDK to read the LM1 metadata of the advertisements and, optionally, of the program content.

- The SDK analyzes this metadata in real time and automatically adjusts the loudness to maintain a consistent audio level across all playback segments.
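On the client, this behavior can be pictured as a gain schedule across the stitched timeline: each segment is normalized to the device target using its own metadata, and when program metadata is absent (the optional case above) the client falls back to the loudness the service already normalizes its program to. Bringing every segment to the same target also keeps each ad from playing louder than the program around it. This is a hypothetical sketch; `TimedLoudness` and `gain_schedule` are not LM1 SDK APIs.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class TimedLoudness:
    start_s: float                  # segment start on the playback timeline
    kind: str                       # "program" or "ad"
    loudness_lufs: Optional[float]  # None when no metadata was delivered


def gain_schedule(segments: List[TimedLoudness], target_lufs: float,
                  program_fallback_lufs: float) -> List[Tuple[float, float]]:
    """Return (start_s, gain_db) pairs that bring every segment to target_lufs."""
    schedule = []
    for seg in segments:
        level = seg.loudness_lufs
        if level is None:
            if seg.kind == "ad":
                # Assumed policy: ads must carry metadata from the ad server.
                raise ValueError(f"ad at {seg.start_s}s has no loudness metadata")
            level = program_fallback_lufs  # program pre-normalized by the service
        schedule.append((seg.start_s, target_lufs - level))
    return schedule


timeline = [
    TimedLoudness(0.0, "program", None),
    TimedLoudness(30.0, "ad", -18.5),
    TimedLoudness(45.0, "ad", -27.0),
    TimedLoudness(60.0, "program", None),
]
print(gain_schedule(timeline, target_lufs=-24.0, program_fallback_lufs=-24.0))
# [(0.0, 0.0), (30.0, -5.5), (45.0, 3.0), (60.0, 0.0)]
```

A production client would also smooth gain changes at segment boundaries to avoid audible steps; that is omitted here.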

This architecture enables SB 576-compliant loudness control even in dynamic ad insertion environments. Because LM1 metadata is codec-agnostic, it integrates into existing SSAI pipelines with minimal infrastructure changes.

5. Why Side-chain Metadata Matters

- Sends reference information only; the video/audio asset needs no re-encoding or re-mastering.

- Existing infrastructure requires minimal modification, since metadata generation is lightweight and fits into current workflows.

- Program and ad metadata are maintained separately, supporting independent workflows and broad vendor interoperability. This also lets a single ad asset be used consistently across multiple content platforms that each define different target loudness levels, without re-encoding or creating separate ad versions.

- Codec-agnostic: operates seamlessly across AAC, AC-3, and other audio formats, even when multiple codecs coexist within the same program or advertising stream.

- Metadata is generated directly from measurement of the content itself, so it reflects each asset’s actual loudness rather than declared or estimated values.

- Already deployed across multiple device and app platforms, it is highly scalable and production-ready.

6. Wrap-Up

While SB 576 is a consumer-friendly regulation that improves viewer experience, it also introduces new technical challenges for streaming providers.

In fact, the Motion Picture Association and the Streaming Innovation Alliance, representing major platforms like Netflix and Disney, have already expressed concerns about the complexity of implementing the law in practice.

LM1 was specifically designed to address these industry-wide issues. When the standard was first defined in 2020, it was built to solve the structural problem of inconsistent loudness across broadcast and OTT ecosystems.

If you’re interested in learning more about how LM1 can help your platform prepare for SB 576, please contact us through the Contact Us link.