Gaudio Lab SDK Team, Bridging Audio AI and Products

🎧 This interview shares a behind-the-scenes look at Gaudio Lab’s GDK (Gaudio SDK) Development Team: their working style, technical challenges, team culture, and journey of growth. The GDK Development Team tackles the challenge of addressing diverse client needs with a single SDK, while actively experimenting with AI Agents to enhance development efficiency. In an environment where autonomy meets accountability and where learning is continuous, the team is constantly evolving.

If you’re curious about life on the GDK Development Team or thinking of joining Gaudio Lab, don’t miss this story! 😉

🧩 What does the SDK Development Team do?

Before we dive into the details, let’s briefly go over what an SDK is. :)

SDK stands for Software Development Kit. It’s essentially a set of software tools that helps developers implement specific features more easily. For example, if you want to add a certain function to an app—like login, payment, or audio processing—you don’t have to build everything from scratch. Instead, you can use the tools provided in the SDK to build it faster and more efficiently.
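To make the "don't build everything from scratch" idea concrete, here is a minimal sketch in Python. It is not Gaudio SDK code. It assumes Python's standard `wave` module as a stand-in for an SDK, and the helper names `write_test_tone` and `read_wav_info` are hypothetical, made up for this illustration. The point is only that the calling code never touches the WAV byte layout itself.

```python
import io
import math
import struct
import wave


def write_test_tone(buffer, frame_count=480, sample_rate=48000, frequency_hz=440.0):
    """Write a short mono, 16-bit sine tone into `buffer` as WAV data."""
    samples = [
        int(16000 * math.sin(2 * math.pi * frequency_hz * n / sample_rate))
        for n in range(frame_count)
    ]
    with wave.open(buffer, "wb") as wav_out:
        wav_out.setnchannels(1)            # mono
        wav_out.setsampwidth(2)            # 2 bytes per sample = 16-bit audio
        wav_out.setframerate(sample_rate)
        # Pack the samples as little-endian signed 16-bit integers.
        wav_out.writeframes(struct.pack(f"<{frame_count}h", *samples))


def read_wav_info(buffer):
    """Return basic WAV metadata without parsing the header by hand."""
    buffer.seek(0)
    with wave.open(buffer, "rb") as wav_in:
        return {
            "channels": wav_in.getnchannels(),
            "sample_width_bytes": wav_in.getsampwidth(),
            "sample_rate": wav_in.getframerate(),
            "frames": wav_in.getnframes(),
        }


if __name__ == "__main__":
    in_memory_file = io.BytesIO()
    write_test_tone(in_memory_file)
    print(read_wav_info(in_memory_file))
```

The library handles the header format and byte order, so the application code only states what it wants: one channel, 16-bit samples, a 48 kHz sample rate. A product SDK offers the same kind of shortcut for much larger features.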

Bridging audio AI technology with real products

Q: Please introduce yourself and your role on the GDK Development Team.

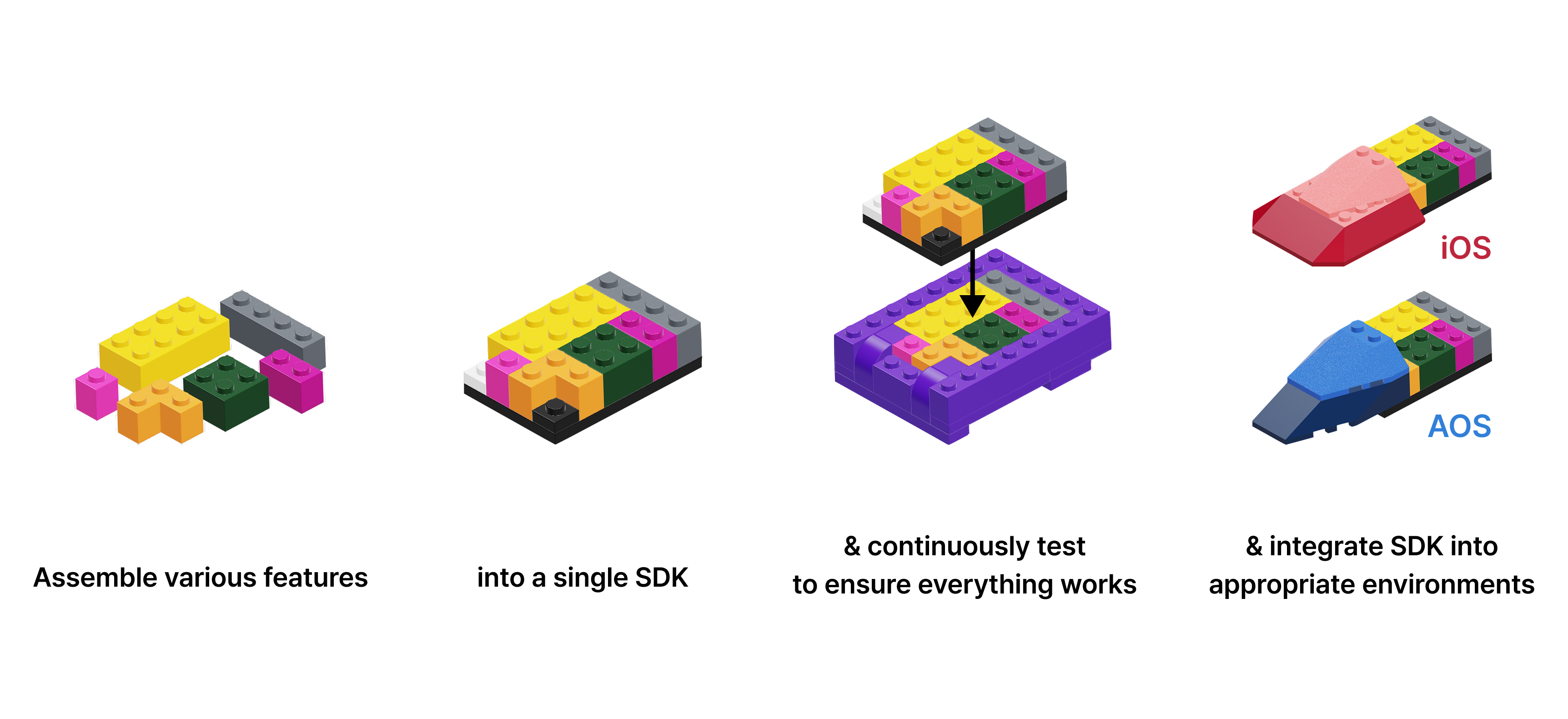

Leo: We’re the SDK developers in the GDK squad. Simply put, we’re the key link that ensures our audio AI technology works seamlessly in real products. We define use cases, consider which technologies are suitable for which products, and identify the hardware (chipsets) and environments they’ll run on.

We then integrate the SDK into those environments, conduct continuous testing, and respond quickly when issues arise. Our ultimate goal is to deliver a stable, flexible SDK that clients can use independently.

In this process, I primarily focus on porting the SDK to real-world device environments. This involves identifying how and where to integrate the SDK for each system, and ensuring that it runs reliably within the constraints of each platform.

William: While Leo mainly handles hardware porting, I primarily work on the mobile side and also take on DevOps responsibilities. I develop internal tools for build and deployment, and manage frameworks that support the entire development pipeline. I also serve as the first point of contact for Gaudio SDK products that are in the stabilization and maintenance phase, receiving issues from client companies, identifying the problems, and working to resolve them.

Our team isn’t rigidly divided by roles. While we each have our primary responsibilities, we help each other flexibly depending on the situation.

Q: Gaudio Lab has a number of products. Which products have been developed with your team’s involvement?

William: Just to name a few, LM1 (loudness normalization tech), GSA (a spatial audio solution), and Just Voice (an AI noise reduction solution) all involved the GDK team. Other products include ELEQ (Loudness EQ), Smart EQ, Binaural Speaker (3D audio renderer), and GFX (sound effects library). We’ve had a hand in all of them.

How we joined this dynamic team

Q: It sounds like a great environment to gain diverse experience. What led you to join the GDK Development Team in Gaudio Lab?

William: Honestly, when I first joined, I didn’t have much knowledge of audio, or even much interest in it (laughs). I started as an intern, and my first task was fixing a bug in a WAV parser. That led me to explore the metadata structure of WAV files, and I was fascinated to learn how analog signals are represented digitally. It brought back the same excitement I felt when I first discovered computer science. That curiosity kept growing, and now I’ve been here for five years.

Leo: I was already familiar with Gaudio Lab through people I knew, so I naturally had an interest. At my previous company, I wanted to try backend development, but ended up mainly working on device-side tasks. I wanted to find work that was both engaging and something I could excel at.

The idea of SDKs—tools built by developers for developers—really intrigued me. Plus, the opportunity to develop across various device platforms was a big draw. Those two reasons alone made me want to take on the challenge, which led me to join Gaudio Lab.

Growing through diverse projects

Q: In what ways have you developed professionally since joining Gaudio Lab?

William: Working on the GDK Development Team has exposed me to a wide range of tasks and technologies. Over the past five years, I’ve worked on nearly 10 projects, across various programming languages and environments. I think of it as a journey from sand to stone—you don’t become solid with a single splash of water. Repeated exposure to projects helps you harden and grow. It’s been tough, but I’ve gained a lot from it.

Some might ask, “Can you go deep if you’re doing so many different things?” But on our team, staying shallow isn’t an option. Platform integration requires an understanding deep enough to communicate effectively with clients. Working across so many different environments has helped me develop not just technical skills, but also a broader perspective and deeper insights, things that are often hard to gain in the early stages of a career.

Leo: Compared to previous companies I’ve worked for, Gaudio Lab is much freer and more flexible. The autonomous working style and active communication among team members gave me the impression that the company is “alive.” I’ve had many chances to apply my experience freely and, even as a senior hire, I’ve found plenty of opportunities to keep learning and challenging myself. Thanks to a culture that embraces experimentation, I’ve been exposed to a variety of technologies and environments—and recently, I’ve started exploring AI-related work as well.

Curious about the GDK Development Team’s work with AI?

STAY TUNED for Part 2 of our interview!