How Does AI Find Similar Music? Understanding the Criteria Behind the Match

In our previous post, we explored the story behind the creation of Gaudio Music Replacement. This time, we’ll dive into how AI determines whether two pieces of music are "similar"—not just in theory, but in practice.

When broadcasters or content creators export their content internationally, they must navigate complex music licensing issues, which vary by country. To avoid these legal hurdles, replacing the original soundtrack with copyright-cleared alternatives has become a common workaround.

Traditionally, this replacement process was manual—listening to tracks and selecting substitutes by ear. However, because human judgment is subjective, results vary widely depending on the individual’s taste and musical experience. Consistency has always been a challenge.

To address this, Gaudio Lab developed Music Replacement, an AI-powered solution that finds replacement music based on clear and consistent criteria.

Let’s explore how it works.

Finding Music That Feels the Same

How Humans Search for Similar Music

When we try to find music that sounds similar to another, we subconsciously evaluate multiple elements: mood, instrumentation, rhythm, tempo, and more. But these judgments can differ from one day to the next, and vary depending on our familiarity with a genre.

Moreover, what we consider "similar" depends heavily on what we focus on. One person may prioritize melody, while another emphasizes instrumentation. This subjectivity makes it difficult to search systematically.

Can AI Understand Music Like We Do?

To a useful degree, yes. AI models can be trained to approximate how humans perceive musical similarity.

Multiple academic studies have explored this topic. In this post, we focus on one of the foundational models behind Gaudio Music Replacement: the Music Tagging Transformer (MTT), developed by Keunwoo, a Gaudin.

The core concept behind MTT is music embedding. This refers to converting the characteristics of a song into a numerical vector—essentially, a piece of data that represents the song’s identity. If we describe a track as “bright and upbeat,” an AI can interpret those traits as vector values based on rhythm, tone, instrumentation, and more.

These vectors act like musical DNA, allowing the system to compare a new song against millions of candidates in the database and return the most similar options quickly and consistently.

MTT plays a key role in generating these embeddings, automatically tagging a song's genre, mood, and instrumentation, and transforming them into vector representations the AI can process.
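To make the idea of "tags become a vector" concrete, here is a toy sketch. It is not MTT itself: a real model learns its representation from audio, while this example assumes we already have tag scores in hand. The tag list `TAGS`, the function `tags_to_vector`, and all scores are hypothetical names and values chosen for illustration.

```python
# Hypothetical tag vocabulary; a real tagger uses its own, much larger label set.
TAGS = ["rock", "jazz", "happy", "sad", "guitar", "piano"]


def tags_to_vector(tag_scores):
    """Turn {tag: score} into a fixed-order list of floats (missing tags become 0.0)."""
    return [float(tag_scores.get(tag, 0.0)) for tag in TAGS]


# Two made-up tracks described by made-up tag scores.
bright_upbeat = tags_to_vector({"rock": 0.7, "happy": 0.9, "guitar": 0.8})
mellow_ballad = tags_to_vector({"jazz": 0.6, "sad": 0.8, "piano": 0.9})
```

Because every track ends up as a list of numbers in the same order, any two tracks can be compared position by position, which is what makes a systematic search possible.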

Replacing Songs with AI: Matching Similar Tracks

Music Embedding vs. Audio Fingerprint

There are two main technologies AI uses to analyze music: music embedding and audio fingerprint. While both convert audio into numerical form, their goals differ.

- Audio fingerprint is designed to uniquely identify a specific track, even in cases where it has been slightly altered. It’s great for detecting duplicates or copyright infringements.

- Music embedding, on the other hand, captures a song’s style and feel, making it ideal for identifying similar (but not identical) tracks.

When it comes to music replacement, embedding is far more useful than fingerprinting. The AI needs to recommend tracks that evoke a similar emotional or sonic atmosphere, not locate copies of the original recording.

How AI Searches for Similar Tracks

Music Replacement uses music embeddings to search and replace music in a highly structured way.

First, it builds a database of copyright-cleared music. Each song is divided into segments of appropriate length. The AI then pre-processes each segment to generate and store its embedding vector.

When a user uploads a song they want to replace, the AI calculates that song’s embedding and compares it to all stored vectors in the database. It uses a mathematical metric called Euclidean distance to measure similarity. The smaller the distance, the more similar the tracks.
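The search step described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Gaudio's implementation: the three-dimensional vectors, the track IDs, and the function names `build_index` and `most_similar` are all hypothetical, and the comparison is a plain brute-force scan.

```python
import math


def build_index(library):
    """Flatten {track_id: [segment_embedding, ...]} into (track_id, segment_no, vector) rows."""
    return [
        (track_id, segment_no, vector)
        for track_id, segments in library.items()
        for segment_no, vector in enumerate(segments)
    ]


def most_similar(query, index, k=3):
    """Return the k closest entries as (distance, track_id, segment_no), nearest first."""
    scored = [
        (math.dist(query, vector), track_id, segment_no)  # Euclidean distance
        for track_id, segment_no, vector in index
    ]
    return sorted(scored)[:k]


# Made-up embeddings for a tiny copyright-cleared library.
library = {
    "cleared_001": [[0.9, 0.1, 0.8], [0.7, 0.2, 0.9]],
    "cleared_002": [[0.1, 0.9, 0.2]],
}
index = build_index(library)
results = most_similar([0.8, 0.1, 0.8], index, k=2)
```

Here both of the top results come from `cleared_001`, because its segments sit much closer to the query than the single segment of `cleared_002`. A scan like this touches every stored vector, so a large library would likely need an approximate nearest-neighbor index instead.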

But it doesn’t stop there. The AI also takes into account genre, tempo, instrumentation, and other musical properties. Users can even prioritize specific elements—like asking the AI to find replacements that match tempo above all else.

Gaudio Music Replacement also supports advanced filtering, allowing users to fine-tune their search results to fit exact needs.
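One plausible way to combine prioritization and filtering is a weighted distance plus a pre-filter. The sketch below assumes each track carries separate feature groups (`tempo`, `timbre`) and a `genre` label. Those field names, the weights, and the functions `weighted_distance` and `search` are hypothetical, and the product may work quite differently.

```python
import math


def weighted_distance(a, b, weights):
    """Euclidean distance in which each feature group is scaled by a user-chosen weight."""
    total = 0.0
    for name, weight in weights.items():
        total += weight * sum((x - y) ** 2 for x, y in zip(a[name], b[name]))
    return math.sqrt(total)


def search(query, candidates, weights, genre=None):
    """Rank candidates by weighted distance, optionally keeping only one genre."""
    pool = [c for c in candidates if genre is None or c["genre"] == genre]
    return sorted(
        pool,
        key=lambda c: weighted_distance(query["features"], c["features"], weights),
    )


# Made-up query and candidates.
query = {"features": {"tempo": [0.60], "timbre": [0.2, 0.8]}}
candidates = [
    {"id": "A", "genre": "pop", "features": {"tempo": [0.61], "timbre": [0.9, 0.1]}},
    {"id": "B", "genre": "pop", "features": {"tempo": [0.90], "timbre": [0.2, 0.8]}},
    {"id": "C", "genre": "jazz", "features": {"tempo": [0.60], "timbre": [0.2, 0.8]}},
]

tempo_first = search(query, candidates, {"tempo": 10.0, "timbre": 0.1}, genre="pop")
timbre_first = search(query, candidates, {"tempo": 0.1, "timbre": 10.0}, genre="pop")
```

With tempo weighted heavily, track A ranks first because its tempo is almost identical to the query. With timbre weighted heavily, track B wins instead. Track C never appears in either list because the genre filter removes it before ranking.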

The Devil Is in the Details: From Model to Product

While music embeddings provide a strong technical foundation, deploying this system in real-world environments revealed a new set of challenges. Let's explore a few of them.

Segment Selection

Choosing which part of a song to analyze can impact results just as much as choosing which song to use.

If we divide every track into uniform chunks (say, 30 seconds each), we might cut across bar lines, break musical phrases, or miss important transitions, resulting in poor matches.

Music is typically structured into intros, verses, choruses, and bridges. These sections often carry distinct emotional tones. By analyzing the song’s internal structure and aligning segments accordingly, we improve both matching accuracy and musical coherence.
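As a rough sketch of structure-aware segmentation, the function below cuts a track at section boundaries first and only subdivides a section when it exceeds a maximum length. It assumes the section start times are already known from some structure-analysis step. The function name `structure_aware_segments`, the 30-second limit, and the example times are hypothetical.

```python
def structure_aware_segments(section_starts, track_end, max_len=30.0):
    """Split a track at section boundaries; subdivide only sections longer than max_len.

    section_starts: sorted start times in seconds (assumed output of a
    structure-analysis step, e.g. intro / verse / chorus boundaries).
    """
    bounds = list(section_starts) + [track_end]
    segments = []
    for start, end in zip(bounds, bounds[1:]):
        t = start
        # Long sections are chopped into max_len pieces, never across a boundary.
        while end - t > max_len:
            segments.append((t, t + max_len))
            t += max_len
        segments.append((t, end))
    return segments


# Made-up boundaries: intro at 0 s, verse at 12 s, chorus at 40 s, outro at 95 s.
segments = structure_aware_segments([0.0, 12.0, 40.0, 95.0], track_end=120.0)
```

Only the 55-second chorus gets split here; every other segment begins and ends on a section boundary. A fuller version would probably also snap the cuts inside long sections to the nearest bar line.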

Volume Dynamics: The Envelope Problem

In video content, background music often changes dynamically depending on what’s happening in the scene. For example, the music might drop in volume during dialogue and then rise again during an action sequence.

These dynamic shifts are represented by the envelope—a term for how a sound’s volume or intensity changes over time. If AI ignores the envelope when replacing music, the result can feel awkward or unnatural.

Ideally, the AI finds a replacement track with a similar envelope. If that’s not possible, it can learn the original envelope and apply it to the new track—preserving the intended emotional flow.
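The fallback of applying the original envelope to the new track can be illustrated with a simple frame-by-frame gain match. This sketch uses a measured RMS envelope rather than a learned one, and it is an assumption about how such a step might look. The function names `rms_envelope` and `apply_envelope`, the frame size, and the sample values are hypothetical.

```python
import math


def rms_envelope(samples, frame):
    """RMS level of each consecutive block of `frame` samples."""
    envelope = []
    for i in range(0, len(samples), frame):
        block = samples[i:i + frame]
        envelope.append(math.sqrt(sum(s * s for s in block) / len(block)))
    return envelope


def apply_envelope(original, replacement, frame, eps=1e-9):
    """Scale each frame of `replacement` so its RMS matches the same frame of `original`."""
    target = rms_envelope(original, frame)
    current = rms_envelope(replacement, frame)
    shaped = []
    for n, (tgt, cur) in enumerate(zip(target, current)):
        gain = tgt / max(cur, eps)  # eps avoids division by zero on silent frames
        shaped.extend(s * gain for s in replacement[n * frame:(n + 1) * frame])
    return shaped


# Made-up signals: the original is quiet, then loud; the replacement is constant.
original = [0.1, -0.1] * 4 + [0.8, -0.8] * 4
replacement = [0.5, -0.5] * 8
shaped = apply_envelope(original, replacement, frame=8)
shaped_envelope = rms_envelope(shaped, 8)
```

After shaping, the replacement follows the same quiet-then-loud contour as the original. Abrupt gain changes at frame edges can produce audible steps, so a practical version would likely smooth or interpolate the gain between frames.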

Mixing & Mastering

Finding the right song is only half the battle. For a replacement to feel seamless, the new track must blend naturally with existing dialogue and sound effects.

While AI can find musically similar tracks, determining whether the new audio actually fits the original mood, tone, and mix often requires human expertise. In fact, professionals say they spend as much time mixing and mastering as they do selecting the right music.

To address this, Gaudio Lab turned to WAVELAB, its own subsidiary and one of Korea’s leading film sound studios. With years of experience in cinema and broadcast sound design, WAVELAB contributed its expertise to develop a professional-grade AI mixing and mastering engine. This engine goes beyond simple volume adjustment, capturing the director’s intent and applying it to the new track with nuance and precision.

Coming Up Next: Where Does the Music Begin—and End?

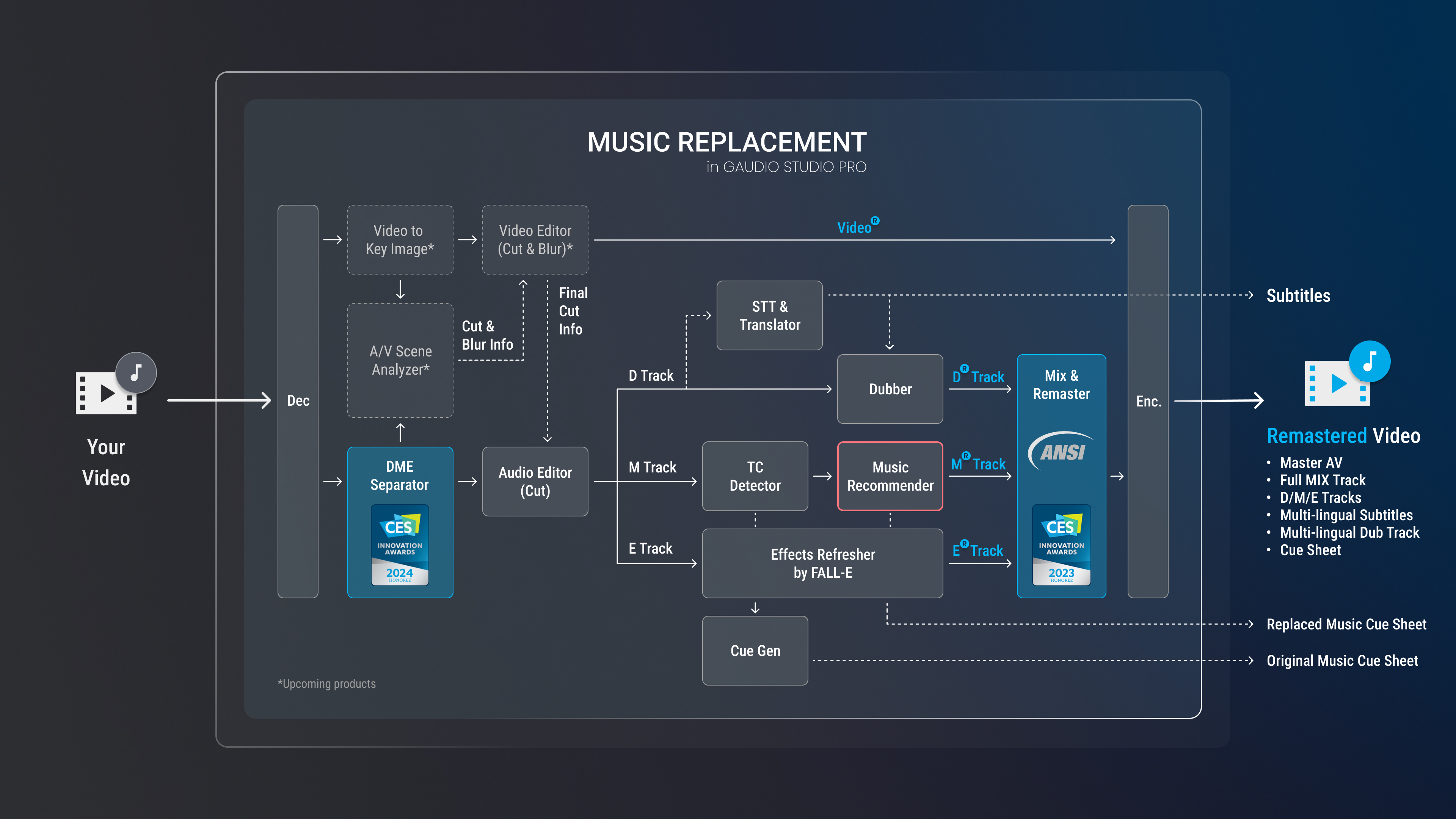

The image above shows Gaudio’s Content Localization end-to-end system diagram, including Gaudio Music Replacement. In this post, we focused on the Music Recommender, which takes a defined music segment and swaps it for a similar one.

But in real-world content, the first challenge isn’t always about which song to replace—it’s figuring out where the music even is.

In many videos, music, dialogue, and sound effects are mixed into a single audio track. Before we can replace anything, we need to separate those elements.

But here’s a question: in a movie scene, is a cellphone ringtone considered music—or a sound effect? And what if multiple songs are joined together with fade-ins and fade-outs? The AI must then detect where one track ends and another begins, by accurately identifying timecodes.

In the next article, we’ll explore two powerful technologies Gaudio Lab developed to solve these problems:

- DME Separator: separates dialogue, music, and effects from a master audio track

- TC (Time Code) Detector: identifies precise start and end points for music segments

Stay tuned—we’ll dive deeper into how AI learns to define the boundaries of music itself.