AI Music Replacement: Solve Music Copyright Issues & Go Global with Gaudio Lab

Case 1.

The Netflix documentary "Dear Jinri," which delves into the untold story of the celebrity Sulli, encountered a critical issue during its production. The filmmakers wanted to use a video that Sulli had recorded herself and left on her phone as a key element in the end credits. However, the video, much like a personal diary, also captured "La Vie en Rose" by Édith Piaf playing in the background. Because the copyright for the song had not been cleared, the filmmakers were unable to use this deeply emotional scene.

Case 2.

A popular South Korean variety show was exported to Taiwan, where it became a major success. However, complications arose when the music used in the show couldn't be cleared for copyright in Taiwan, forcing the production company to pay substantial royalties. This unexpected cost ended up surpassing the revenue earned from the show's export, creating a net financial loss.

Case 3.

A well-known vlogger ran into problems while trying to upload a video of their live experience at a soccer match. The stadium's background music included copyrighted songs, which triggered a copyright claim from YouTube's Content ID system. As a result, the video could not be uploaded as planned.

All three cases are real inquiries received by Gaudio Lab, a company dedicated to solving diverse audio challenges.

Whether for individual creators like YouTubers or professional broadcasters, producing video content often requires dealing with unexpected situations where music must be removed or replaced. And these examples are just the beginning.

Let’s explore how Gaudio Lab resolved these music copyright challenges.

(Photo = Still from Dear Jinri)

Why Replace Music?

The most common reason is to address music copyright issues.

Broadcast networks typically pay copyright fees for music used during the initial airing of a program. To elaborate, most networks pay a fixed fee to music copyright management organizations, which grants them unlimited rights to use songs managed by the organization—but only for broadcasts on their own channels.

However, when the same content is distributed to platforms like Netflix or FAST (Free Ad-Supported Streaming TV) channels, additional music licensing must be secured in each country where the content is streamed. This can incur substantial costs. Even if the content is already complete and ready to sell, licensing fees can easily exceed the revenue potential.

To navigate such copyright hurdles, creators have historically relied on the following options:

- (1) Abandon exporting the content.

- (2) Remove the affected portions entirely (impossible for variety shows with music throughout).

- (3) Replace the music with copyright-free alternatives (a process called "video re-editing").

For option (3), the process has been entirely manual: editors use basic audio tools to isolate the music, search for similar alternatives in limited databases, and reinsert the replacements into the original video so that the edits are not noticeable. This painstaking, labor-intensive work often takes two to three weeks for a single 60-minute video.

Individual creators like YouTubers face similar obstacles. When dealing with videos containing copyrighted music, they often (1) abandon uploading the video, (2) edit out the affected portions entirely, or (3) more recently, use music separation technology to remove the music while retaining the other audio elements.

Music copyright is so crucial for platforms like YouTube that their Content ID management system detects copyrighted music in all uploads and suggests these same three options.

Gaudio Lab's Music Replacement: A Revolutionary AI Solution for Copyright Issues

Gaudio Lab’s latest AI-powered video audio editing solution, Gaudio Music Replacement, revolutionizes how these challenges are addressed.

How does it differ from traditional manual methods?

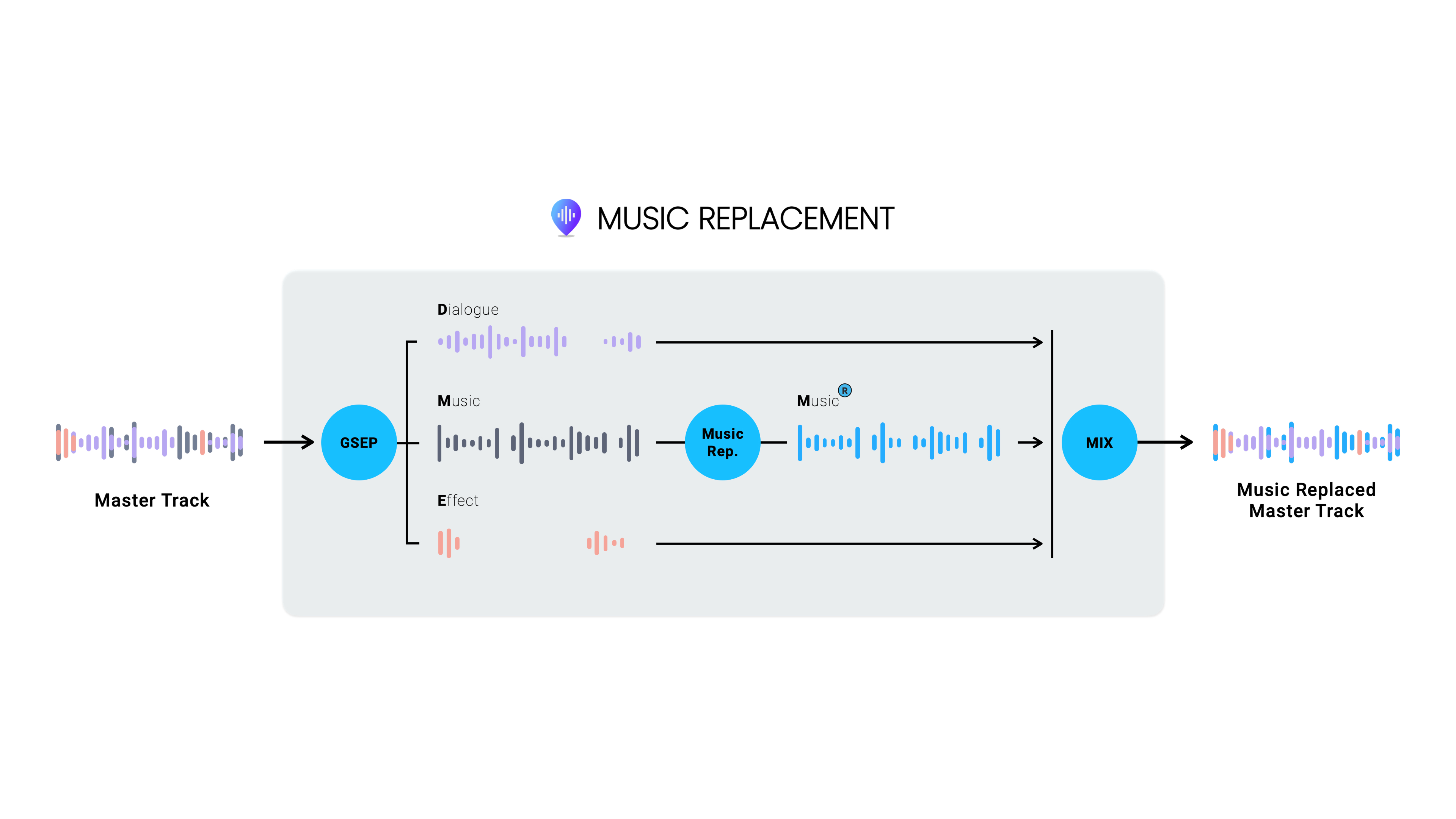

First, users upload their video to Music Replacement, where the AI separates the audio into dialogue, music, and effects tracks (commonly referred to as DME). Gaudio Lab's audio separation technology, GSEP, is widely recognized for its best-in-class separation quality. At CES 2024, Gaudio Lab won a CES Innovation Award for Just Voice, a product that enables real-time voice isolation.

Next, the separated music track is analyzed by AI, which identifies individual pieces of music and divides them into segments. The system then uses a music recommendation engine to search its extensive music database for similar tracks. This database contains tens of thousands of songs across various genres, all cleared for global use. Unlike low-quality AI-generated music, these tracks are created and uploaded by professional artists worldwide, ensuring top-notch quality.
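
To make the recommendation step concrete, here is a minimal sketch of similarity search over a music catalog. It is not Gaudio Lab's actual engine: the three-number feature vectors, the catalog entries, and the `recommend` function are all hypothetical, and cosine similarity is simply one common way to compare such vectors.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(query, catalog, top_k=2):
    """Return the titles of the top_k catalog tracks most similar to the query."""
    scored = [(cosine_similarity(query, vec), title) for title, vec in catalog.items()]
    scored.sort(reverse=True)
    return [title for _, title in scored[:top_k]]


# Hypothetical catalog: each cleared track is summarized by a made-up
# feature vector (for example tempo, energy, brightness).
CATALOG = {
    "cleared_waltz": [0.9, 0.1, 0.2],
    "cleared_rock": [0.1, 0.9, 0.7],
    "cleared_chanson": [0.8, 0.2, 0.1],
}

# Hypothetical features extracted from one segment of the separated music track.
segment_features = [0.85, 0.15, 0.15]
print(recommend(segment_features, CATALOG))
```

In this toy catalog the two slow, soft tracks rank above the rock track for the example segment. A real system would work with far richer features learned from audio, but the idea of ranking cleared tracks by closeness to the original segment is the same.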

Finally, the AI seamlessly re-mixes the replaced music track with the original dialogue and effects tracks to produce the final edited video.
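
As a rough illustration of this re-mixing step, the sketch below sums three tracks sample by sample. It assumes each track is a plain list of float samples in the range -1.0 to 1.0 at the same sample rate. The `remix` function and its `music_gain` parameter are hypothetical, and a production mixer would likely also handle loudness matching and crossfades, which are omitted here.

```python
def remix(dialogue, effects, new_music, music_gain=0.8):
    """Mix dialogue, effects, and replacement music into one track.

    All inputs are equal-length lists of float samples in [-1.0, 1.0].
    """
    n = len(dialogue)
    if len(effects) != n or len(new_music) != n:
        raise ValueError("all tracks must have the same length")

    mixed = []
    for d, e, m in zip(dialogue, effects, new_music):
        sample = d + e + music_gain * m
        # Hard-clip to the valid range so the sum cannot overflow.
        mixed.append(max(-1.0, min(1.0, sample)))
    return mixed


# Tiny two-sample example with made-up values.
final_track = remix([0.5, 0.0], [0.0, 0.9], [0.5, 0.5], music_gain=0.5)
print(final_track)
```

Because the dialogue and effects tracks are passed through unchanged, only the music is swapped. That is what lets the edited video keep its original voices and sound effects.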

With Music Replacement, creators no longer need to (1) abandon their content, (2) cut out affected portions, or (3) spend weeks and substantial resources on manual re-editing. Instead, they simply upload their video to Music Replacement, wait briefly, and… Boom! They receive a video where the original musical intent is preserved, but all copyright issues are resolved. (Blind tests conducted with professionals found that many couldn’t distinguish the edited version from the original!)

The clients mentioned earlier have already adopted Music Replacement as a core solution. Exporting content internationally involves more than resolving music copyright issues—it often requires broader content localization efforts. Clients naturally request additional services like dubbing, subtitles, cue sheet generation, and video editing.

“I want to handle everything within Gaudio!”

As demand grows, Gaudio Lab’s research and product teams are busy adding new features and improving both convenience and performance. The name Music Replacement no longer fully captures the product’s capabilities, so the product owner is contemplating a new name. 😅

Most of Music Replacement’s features are powered by AI. In our next post, we’ll dive deeper into the technical details of each process and showcase the convenient tools in the Gaudio Music Replacement Editor. Stay tuned!