Gaudio Lab's Golden Ears Introduce: Spatial Audio, Try Listening Like This

Listening to sound is quite a personal experience. Everyone has their own tastes when it comes to music. Personally, I really enjoy listening to spatially rich sounds in a concert hall, as I can feel the heat and energy from my favorite artists right in front of me through the sound.

With 'Spatial Audio' technology gaining global attention, anyone with a Spatial Audio-supported smartphone and earbuds can now easily experience sound as if they were at a concert, without the need for multiple speakers or expensive audio equipment.

Thanks to this convenience, interest in Spatial Audio is growing rapidly! To help you enjoy Spatial Audio even more, we would like to share some tips from the Golden Ears of Gaudio Lab, a pioneer in Spatial Audio technology.

When people talk about Korea's 'Sound Craftsmen,' they think of Gaudio Lab

First, let us introduce Gaudio Lab. The company prides itself on having the highest concentration of audio lovers in the world, and its team reflects that. Among its members are the 'Golden Ears': people who can tell good sound from bad, whether through innate talent or through long years of honing their skills in the industry. Every Gaudio Lab technology must pass through the hands and ears of these audio craftsmen before it sees the light of day. We have invited some of these Golden Ears, who have a deep understanding of and expertise in Spatial Audio, to share valuable tips with you!

Although the sound experience is highly personal, we hope that their advice serves as an opportunity to rediscover your preferences!

Introducing Gaudio Lab's Leading Golden Ears

James

James holds a Ph.D. in acoustical engineering. He has enjoyed listening to and playing music since childhood, and he especially loves playing the piano and listening to piano music. He is a fan of classical music and jazz and listens to many old recordings. Lately, he has been immersed in Claudio Arrau's piano performances.

Jayden

Jayden also holds a Ph.D. in acoustical engineering. He entered the world of music through the influence of his roommates. He is a romantic who enjoys spending quiet nights with ballads. His dream is to play the guitar with his child once the child grows a little older.

Bright

Bright is a sound engineer. He first dreamed of becoming one after listening to his cousin's MP3 player. He enjoys ballads accompanied by orchestras. He is a space enthusiast and is also very interested in building computers.

1. These days, there are many Spatial Audio technologies on the market that are gaining a lot of attention. Have you experienced any well-implemented or impressive Spatial Audio?

Jayden : Personally, I think that among the Spatial Audio solutions currently available, the Spatial Audio on the AirPods Pro (not the AirPods Max) is the most refined. I believe the other solutions still have areas that need improvement.

To give sound a sense of space, it's important to give listeners a sense of 'direction,' so that they can tell where each sound is coming from. To do this, you need to apply what are called 'binaural cues' to the sound. Simply put, because our two ears sit at different positions on the head, a sound reaches the left and right ears at slightly different times and levels, so each ear hears it a little differently. Binaural cues reproduce these differences.
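To make 'binaural cues' a bit more concrete, here is a minimal Python sketch of just one of them, the interaural time difference (ITD): the ear farther from the source hears the sound slightly later than the nearer ear. It uses Woodworth's classic spherical-head approximation, which is our illustrative choice and not Apple's or Gaudio Lab's method. The head radius (8.75 cm), speed of sound (343 m/s), 48 kHz sample rate, and the helper names `woodworth_itd`, `itd_in_samples`, and `apply_itd` are all assumptions for illustration. Real Spatial Audio renderers use full head-related transfer functions (HRTFs), which also capture level and spectral differences, so treat this as a toy.

```python
import math

# Illustrative constants (assumptions, not values from the article).
SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 °C
HEAD_RADIUS = 0.0875    # m, a commonly used average head radius


def woodworth_itd(azimuth_deg: float,
                  head_radius: float = HEAD_RADIUS,
                  c: float = SPEED_OF_SOUND) -> float:
    """Interaural time difference in seconds for a distant source.

    Woodworth's spherical-head approximation:
        ITD = (a / c) * (theta + sin(theta))
    Azimuth: 0 = straight ahead, +90 = fully right, -90 = fully left.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be within [-90, 90] degrees")
    theta = math.radians(azimuth_deg)
    return (head_radius / c) * (theta + math.sin(theta))


def itd_in_samples(azimuth_deg: float, sample_rate: int = 48000) -> int:
    """Round the ITD to a whole number of samples (sign kept)."""
    return round(woodworth_itd(azimuth_deg) * sample_rate)


def apply_itd(mono: list[float], azimuth_deg: float,
              sample_rate: int = 48000) -> tuple[list[float], list[float]]:
    """Return (left, right) channels in which the far ear is delayed.

    Only the time cue is modelled; level and spectral cues are ignored.
    """
    delay = abs(itd_in_samples(azimuth_deg, sample_rate))
    late = [0.0] * delay + list(mono)   # far ear: starts `delay` samples later
    early = list(mono) + [0.0] * delay  # near ear: padded to the same length
    if azimuth_deg >= 0:
        return late, early   # source on the right: left ear is the far ear
    return early, late       # source on the left: right ear is the far ear


if __name__ == "__main__":
    print(f"{woodworth_itd(90) * 1000:.2f} ms")  # ITD for a source at 90 degrees
    print(itd_in_samples(90), "samples at 48 kHz")
```

For a source directly to the right (90°), this sketch gives an ITD of roughly 0.66 ms, about 31 samples at 48 kHz. Delaying one channel by that amount pulls a mono sound toward one side, but without the level and spectral cues an HRTF provides, the image typically still sits inside the head rather than outside it.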

When binaural cues are applied, the high-frequency range becomes relatively emphasized, which changes the timbre. And even if you accept that change in timbre, it is technically difficult to give sound an accurate sense of direction, such as up or down. I think the AirPods Pro's Spatial Audio is well tuned to minimize timbre distortion while still giving the sound a sense of direction.

Another advantage is that the sound stays comfortable regardless of the content being played. Thanks to Apple's characteristically mild, non-fatiguing timbre, your ears won't get tired even after listening for a long time.

Bright : Although results vary depending on how each mix engineer handled the additional Spatial Audio mix, I also felt that Apple's Spatial Audio renders existing stereo sources most seamlessly and spatially. Across all the Spatial Audio renderings I went through, the bass tends to weaken quite a bit, but among them, I would say Apple's is still the most natural.

James : Apart from GSA, Gaudio Lab's own Spatial Audio technology, the Spatial Audio I've listened to most in depth is Apple's. However, I haven't yet found a Spatial Audio that I think is good in every respect.

I believe that each Spatial Audio solution has its own characteristics, strengths, and weaknesses. So rather than ranking them in an absolute hierarchy, we should evaluate them from various perspectives. In that regard, I think Spatial Audio still has a long way to go, and the room for improvement is endless. That means Spatial Audio will keep evolving in the future! :)

2. Do you have any criteria for determining good Spatial Audio, or any personal tips for listening to Spatial Audio well?

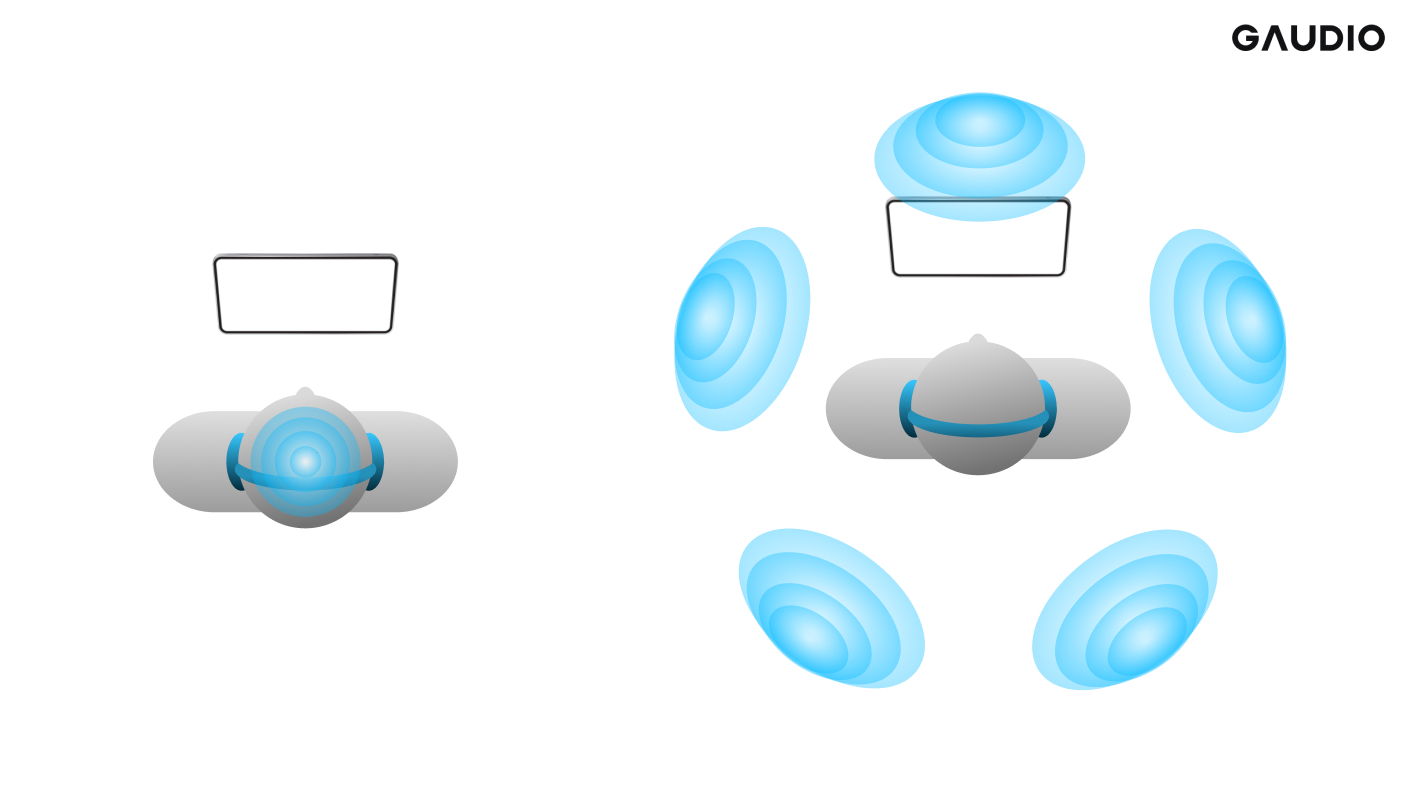

Jayden : When listening to Spatial Audio, I think it's good to assess how well the 'externalization effect' is implemented. As the term 'Spatial Audio' implies, sound played through headphones or earphones should feel as if it is coming from the space outside your head. ("External" means existing outside!) If we were to picture externalization, you can think of it as a spherical sound space that forms outside your head, centered on it.

If you listen carefully to music without Spatial Audio, you can feel that the sound image is concentrated in the center of your head. Yet when you think about it, all the sounds we hear in daily life actually occur in the space outside our heads (outside our ears). Putting on earphones and suddenly hearing sound inside your head is an unnatural situation. Externalization is the technology that makes music played through earphones sound as if it is coming from outside your head rather than inside it. Naturally, the better the externalization, the more the sound through earphones resembles what you would hear in a real space.

What would it be like to experience music exactly as the artist intended? Without Spatial Audio, you often hear sounds blending together, with louder sounds overpowering quieter ones. When Spatial Audio is applied and each sound source is spread out in different directions across the 3D space outside your head, the sound comes to life in every corner, making the audio richer, more three-dimensional, and ultimately closer to the artist's original intention.

Figure 1. Comparison between no externalization effect (left) and its application (right)

James : I also agree that the essence of Spatial Audio is externalization. Instead of sounds that feel forced into your ears, it's important to pay close attention to how the instruments that make up the song naturally disperse in space and how they sound.

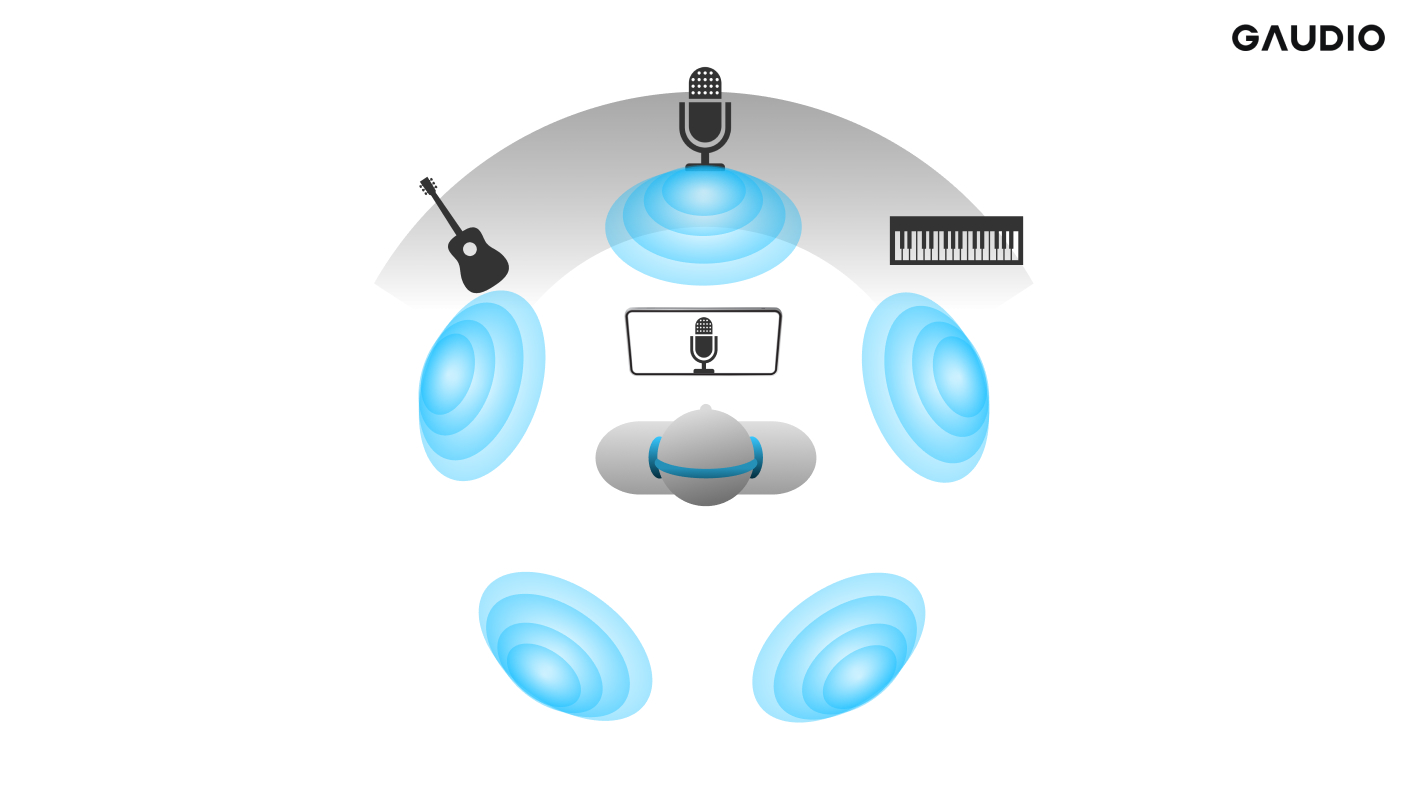

In most music, the sound sources are placed in front of the listener. When you listen to a song and feel that a well-formed frontal stage has been created while you can still sense the individual position of each instrument, it will feel as if you are listening in a physical space rather than through earphones.

Figure 2. Aha, if music creates a sound image like this, it's good Spatial Audio!

Jayden : I think the ultimate goal of Spatial Audio is to reproduce, through earphones, the sound we hear in reality. Observing how sound is perceived in real spaces and remembering those experiences can help you judge which Spatial Audio sounds right to you.

If you have a quiet space where you usually enjoy music, try listening carefully to how sound behaves there. Paying attention to how sounds are perceived in everyday life helps too. We hear many sounds every day, but we tend to focus on the information they carry rather than on the sounds themselves. Consciously having thoughts or experiences like ‘this is how sound is perceived in this situation’ more often will help you recognize good Spatial Audio.

3. Please recommend some songs that are good for enjoying Spatial Audio.

James : Ironically, older recordings often show good effects when Spatial Audio solutions are applied. (Maybe it's because they were recorded with speaker playback in mind? Haha.)

When listening to albums like the Oscar Peterson Trio's "Night Train" or "We Get Requests," you can feel that the frontal stage space is slightly more present with Spatial Audio applied, and the instruments are naturally arranged.

Jayden : It's too difficult to recommend specific songs (laughs), but I think quiet music built from just a few elements, such as a guitar and vocals, will help you understand Spatial Audio better.

Bright : Lately, I've been into the Apple Music Live: Billie Eilish album. The cheers from the audience on both sides and behind you, along with the distinctive details of the concert hall, create a powerful energy without getting muddled. Live albums are indeed great for experiencing Spatial Audio. Movie soundtracks with vivid sound imagery also let you relive the feeling of watching the film in a theater.

Here, we've compiled songs that are great to listen to with Spatial Audio. Turn on the Spatial Audio feature on your device and give them a listen!

If you're curious about how songs with Gaudio Lab's Spatial Audio technology sound, check out this playlist as well.

4. Lastly, is there a shortcut to becoming a golden ear?

James : I think it helps to compare familiar songs on various devices. Each device has slightly different characteristics, so it's good to listen to the same source on each one. With familiar material, it is much easier to tell what has changed.

However, regardless of the process, building your own database is necessary, so it will inevitably take a considerable amount of time! :)

Bright : Buy a good pair of earphones or headphones at least once! When you start listening with good earphones, you'll suddenly hear sounds you couldn't hear before and find yourself wondering, "Oh? Is this what the drum sounded like?" That is how you gradually become a golden ear. I think once you start discovering new elements in the music you love and revisiting old songs, you're no longer an ordinary listener.

Jayden : I think it's important to know which sounds you like and which you don't. First, understand how your ears perceive sound and try to figure out why certain sounds feel uncomfortable or burdensome. Because everyone's ears differ in shape, and each person's brain has been trained differently from birth until now, judging sound is inevitably subjective. Whether you're a golden ear or not depends on how confident you are in your own judgment. Listening a lot, consciously focusing on the sound itself, and gradually gaining confidence in your evaluations is the shortcut to becoming a golden ear. (laughs)

Did you find this helpful? Gaudio Lab is the pioneering Spatial Audio technology company whose technology has been adopted in ISO/IEC MPEG-H Audio, the only audio standard that defines Spatial Audio. It applies GSA (Gaudio Spatial Audio) to devices and to content creation such as live streaming.

If you want to learn more about GSA, created by Gaudio Lab's golden ears, click the button below and check it out!