Android Spatial Audio support

Android Spatial Audio support, so… what is it?

(2023. 03. 15)

In April 2022, rumors began circulating that Android would support spatial audio, and in January 2023 the feature was officially introduced through a Google Pixel update. For now it is not available on every Android smartphone: as of this article's publication date (March 15, 2023), only certain Pixel smartphones connected to Pixel Buds Pro can deliver Android Spatial Audio. The number of supported devices is expected to grow gradually.

If you’re considering implementing Android Spatial Audio, here’s a quick overview of what’s changed and what you’ll need to take into consideration.

Figure 1. Spatial Audio is supported when a Google Pixel smartphone is connected to Pixel Buds Pro.

Spatial Audio, a giant concert hall in your tiny earbuds

Before introducing Android Spatial Audio, let us briefly explain spatial audio technology. Typically, to experience spatial sound, you need a speaker environment of 5.1 channels or more. In addition, you require a dedicated space to place each speaker, and there are many cumbersome tasks, such as setting up an amplifier. As a result, it is challenging to enjoy spatial sound while commuting or exercising outdoors.

Spatial Audio technology overcomes these physical limitations and reproduces immersive sound, as if you were in a real space, even through earphones or headphones. It's like having a listening room equipped with 7.1.4 channels right in your ears. You can already experience this technology with Apple Spatial Audio on AirPods Pro and with some flagship smartphones such as the Samsung Galaxy.

Figure 2. Apple Spatial Audio and Samsung Galaxy’s 360 Audio.

To see what has changed with Android Spatial Audio support, it helps to understand how spatial audio has been implemented up to this point.

Spatial audio products currently on the market are implemented and run directly on the smartphone, which then plays the result through the earbuds or headphones that the smartphone manufacturer supports. The component that turns ordinary audio, such as a stereo MP3, into spatial audio is typically called a renderer, and smartphone manufacturers either develop it themselves or license it from a third party. Seen from another angle, manufacturers share no standardized method for spatial audio, which results in fragmentation.

In addition, head tracking is needed so that the sound stays anchored in the space while the head moves. Imagine briefly turning your head to the right in a real concert hall and having the singer's voice in front of you turn along with your head: the situation would be unrealistic. The same is true of spatial audio. If head movement is not reflected in the sound scene, a sound heard in front of you follows your head wherever it turns, which significantly undermines immersion and makes it impossible to feel that you are in that space.

Head tracking also plays an important role in externalization, the effect that makes sound appear to come from outside the head. According to psychoacoustics, people can tell front from back and left from right more accurately with only two ears because the head moves slightly and unconsciously while listening. Applying head tracking in a spatial audio implementation reproduces this cue and maximizes the impression that the sound comes from outside the head (as if it were coming from speakers).

Figure 3. Spatial Audio without head-tracking (left) and Spatial Audio with head-tracking applied (right).
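The effect of head tracking on source direction can be sketched in a few lines. This is a minimal illustration and not any manufacturer's renderer: the function names and the angle convention (degrees, positive to the listener's right, yaw only) are assumptions made for this example.

```python
def relative_azimuth(source_azimuth_deg: float, head_yaw_deg: float) -> float:
    """Direction of a source relative to the listener's nose, in degrees.

    Assumed convention: 0 is straight ahead in the room, positive angles are
    to the right. The result is wrapped to the range [-180, 180).
    """
    diff = source_azimuth_deg - head_yaw_deg
    return (diff + 180.0) % 360.0 - 180.0


def rendered_azimuth(source_azimuth_deg: float,
                     head_yaw_deg: float,
                     head_tracking: bool = True) -> float:
    """Azimuth the renderer should use for the source."""
    if head_tracking:
        # The source stays fixed in the room, so it shifts relative to the head.
        return relative_azimuth(source_azimuth_deg, head_yaw_deg)
    # Without head tracking the head pose is ignored: the source follows the head.
    return relative_azimuth(source_azimuth_deg, 0.0)


# Singer straight ahead, listener turns 30 degrees to the right.
print(rendered_azimuth(0.0, 30.0))                       # -30.0 (singer now to the left)
print(rendered_azimuth(0.0, 30.0, head_tracking=False))  # 0.0 (singer follows the head)
```

With head tracking the singer ends up 30 degrees to the listener's left, as in a real hall; without it the singer stays glued to the front of the head.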

To support head-tracking-based spatial audio, a sensor capable of recognizing head movements is required, and the IMU (Inertial Measurement Unit) sensor embedded in true wireless stereo (TWS) earbuds is the main component that fulfills this requirement.

The IMU sensor combines an accelerometer and a gyroscope (6-axis) and, in some cases, a magnetometer as well (9-axis). It captures head movement information and transmits it to the smartphone over a Bluetooth channel.

In the current structure of spatial audio (shown below), data crosses the Bluetooth link twice: head movement information travels from the earbuds to the smartphone, and the rendered audio travels back from the smartphone to the earbuds. This results in a time delay between the actual head movement and the sound reflecting that movement, known as Motion-to-Sound (M2S) latency, which will be discussed in detail in a follow-up article (soon to be published). Minimizing this delay is essential to implementing natural spatial audio. (Android also recommends keeping this delay below 150 ms.)

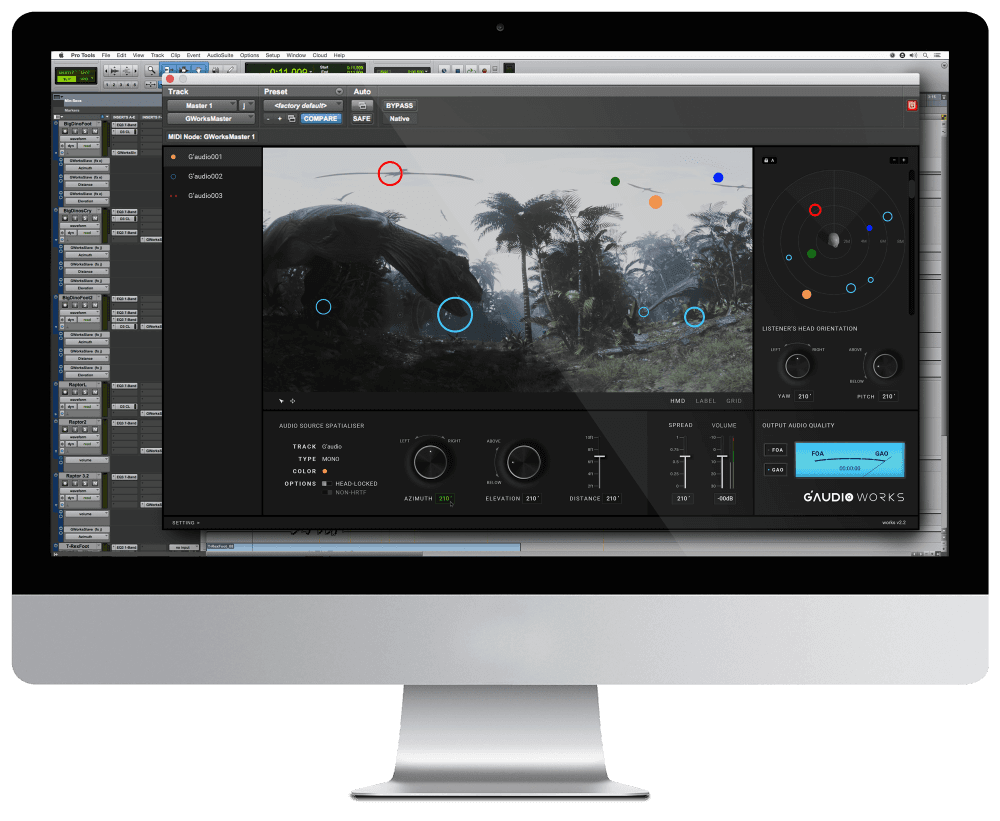

Figure 4. Spatial Audio Operation Structure
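To see why the 150 ms recommendation is demanding, it can help to add up a latency budget stage by stage. The sketch below assumes a simple additive model, and every stage name and number in it is a hypothetical placeholder rather than a measurement of any real device.

```python
M2S_TARGET_MS = 150.0  # upper bound recommended by Android, as cited above


def motion_to_sound_latency_ms(stages_ms: dict) -> float:
    """Total M2S latency, assuming the stages simply add up."""
    return sum(stages_ms.values())


def latency_headroom_ms(stages_ms: dict, target_ms: float = M2S_TARGET_MS) -> float:
    """Positive: within the target. Negative: over budget by that many ms."""
    return target_ms - motion_to_sound_latency_ms(stages_ms)


# Hypothetical, illustrative values only.
example_stages_ms = {
    "imu_sampling": 10.0,
    "bluetooth_earbuds_to_phone": 40.0,   # head pose sent to the smartphone
    "rendering_on_phone": 20.0,
    "bluetooth_phone_to_earbuds": 100.0,  # rendered audio sent back
    "earbud_playback_buffer": 20.0,
}

print(motion_to_sound_latency_ms(example_stages_ms))  # 190.0
print(latency_headroom_ms(example_stages_ms))         # -40.0
```

Even with modest per-stage figures, the two Bluetooth hops dominate this made-up budget, which is why the link itself is the first place to look when tuning.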

Android Spatial Audio, so what has changed?

The main reason for introducing Android Spatial Audio is to standardize the different methods of spatial audio implementation used by different manufacturers. However, while the basic structure is standardized, the actual renderer, which is the key to realizing spatial audio, must be implemented directly by the manufacturers. When smartphone manufacturers implement the renderer, they can easily integrate it into a block called “Spatializer.” This forms the core of Android Spatial Audio.

So, has the Android OS structure changed due to the introduction of this new “Spatializer”? No, it has not. All audio functions performed on Android are handled through collaboration among services such as AudioService[1], AudioPolicyService[2], and AudioFlingerService[3].

The spatial audio feature has been designed to run within this existing audio framework, which reduces the development burden. Traditionally, manufacturers customized their audio policies by adding them to AudioPolicyService. Similarly, the spatial audio feature is added as the Spatializer within AudioPolicyService, without significantly affecting the existing implementation.

From a user experience standpoint, spatial audio is typically controlled by the app and output to the earbuds via Bluetooth. The interface with the app is handled by the SpatializerHelper within AudioService, while rendering is handled by the SpatializerThread within AudioFlingerService. This confirms that the existing Android structure has been inherited without significant changes.

As an app developer, you may be wondering how to apply spatial audio to your app. Fortunately, ExoPlayer, the widely used media player on Android, makes spatial audio easy to implement without requiring you to understand these frameworks. Since version 2.18, ExoPlayer automatically selects multi-channel tracks and provides spatial audio control.

In addition, the head-tracking implementation mentioned earlier requires IMU sensor information. To update the spatial audio renderer based on this information, the Head Tracking HID sensor class has been added to the Sensor Service framework, providing a standardized channel between the Sensor Service and the Audio Service. Furthermore, it is recommended that the IMU sensor information strictly follow the HID (Human Interface Devices) protocol.

The HID protocol is defined by the USB Implementers Forum and was originally designed for USB communication between host devices and peripherals such as keyboards and mice. As Bluetooth devices became widespread, an HID profile for Bluetooth was also defined, expanding the protocol's reach. It is this protocol that smartphones and earbuds use to exchange IMU sensor information.
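As a rough picture of what exchanging IMU information in an HID report involves, the sketch below decodes a head pose from a small binary report. The report layout, the scaling, and the function names are all assumptions made for illustration; the real layout is determined by the device's HID report descriptor and by Android's head tracker HID documentation.

```python
import math
import struct

# Hypothetical report layout (an assumption for this sketch, not a specification):
#   3 x int16, little-endian: rotation vector (x, y, z), full scale = +/- pi rad
#   1 x uint8: counter that changes whenever the reference frame is reset
REPORT_FORMAT = "<hhhB"
INT16_FULL_SCALE = 32767


def parse_head_pose_report(report: bytes):
    """Decode one report into ((x, y, z) in radians, reset_counter)."""
    x, y, z, reset_counter = struct.unpack(REPORT_FORMAT, report)
    scale = math.pi / INT16_FULL_SCALE
    return (x * scale, y * scale, z * scale), reset_counter


def rotation_angle_rad(rotation_vector) -> float:
    """Rotation angle encoded by an axis-angle rotation vector (its length)."""
    return math.sqrt(sum(component * component for component in rotation_vector))


# Build a sample report: half of full scale around the y axis, counter = 1.
sample = struct.pack(REPORT_FORMAT, 0, INT16_FULL_SCALE // 2, 0, 1)
pose, counter = parse_head_pose_report(sample)
print(round(math.degrees(rotation_angle_rad(pose))), counter)
```

Packing the pose into fixed-point integers keeps each report small, which matters when every report has to cross a Bluetooth link on its way to the renderer.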

[1] Acts as the interface between your app and the audio framework.

[2] Receives and processes audio control requests, such as volume control, and can apply the manufacturer's audio system policies to them. The service then asks AudioFlingerService to apply these audio policies to the current audio input and output process.

[3] Responsible for controlling audio inputs and outputs. To do so, it must control audio hardware from different manufacturers, with different drivers, in a unified way. This is achieved through a Hardware Abstraction Layer (HAL), which serves as the interface to the hardware. The audio inputs and outputs controlled here follow the audio policy received from AudioPolicyService.

Figure 5. Changes in the Android stack with the addition of Android Spatial Audio

Source: Google Android Source (https://source.android.com/docs/core/audio/spatial)

Android Spatial Audio is here, but a lot of work still needs to be done.

Thanks to the easy-to-use framework that Android Spatial Audio provides for applying spatial audio, Android device manufacturers can now focus on how to implement spatial audio effectively. However, some support limitations raise issues that manufacturers need to consider. Let's take a closer look at them.

• Manufacturers must implement the spatializer or renderer themselves. They also need to design their products while taking into account the time delay that can occur due to head tracking support. However, achieving the recommended time delay of 150 milliseconds or less in Android Spatial Audio is quite challenging, as previously explained.

• Processing is only possible on high-performance devices that support Android 13. This means that it cannot be implemented on devices that do not run Android, such as earbuds. It is possible to implement the renderer directly on earbuds without using the Android Spatial Audio stack, but we will cover that method on a later occasion.

• For manufacturers who require a consistent spatial audio experience across various devices, such as smartphones, tablets, TVs, and laptops, the burden is significantly increased. They need to consider not only smartphones but also other devices like TVs, earbuds, or headphones. Even if the earbuds or headphones are of high quality, if spatial audio is poorly implemented on the smartphone used to connect them, users may experience unintended sound effects.

• At present, spatial audio is supported only for 5.1-channel audio; stereo audio is not supported. Because stereo content is far more common than 5.1-channel content, users have few opportunities to actually use this feature.

If you are a manufacturer considering implementing Android Spatial Audio, just ask Gaudio Lab. Our solution includes not only optimized libraries for high-quality renderers (spatializers) that manufacturers need to implement themselves, but also incorporates various know-how to minimize time delay.

Gaudio Lab won an innovation award at CES 2023 for its Spatial Audio technology. For more detailed information on GSA, click here (also, listen to the GSA demo 🥳).
