CES at a Glance

We started off the new year in full swing by exhibiting at CES, the largest consumer tech show, held annually in Las Vegas. This year's show brought nearly 200,000 attendees from across the globe to the city's famous Strip, along with the industry's biggest innovators, influencers and trendsetters, who set the stage for this year's emerging technology.

From corrective eye lenses for dogs to a self-driving suitcase, the entertaining, out-of-the-box gadgets seemed as endless as the lines of Ubers and buses shuttling people to and from the show's nine locations. Amid the novelties, G'Audio spotted numerous exciting trends in the spatial audio field to keep an eye on this year.

Here are the trends and emerging tech we're watching in 2018:

Soundbars

Soundbars are taking the spatial audio industry by storm thanks to their ability to produce 3D sound without a multi-speaker setup such as 5.1, 7.1, or even 9.1 surround sound. German audio company Sennheiser showcased its soundbar prototype for home theater 3D audio. The Ambeo 3D Soundbar includes 13 speakers, among them nine across the front and two angled on top. The prototype doesn't have a release date yet, but it is expected to hit the market by the end of the year. Fraunhofer's upHear spatial audio microphone processing technology can now play back on soundbars, enhancing the immersive audio experience (note that upHear is purely an algorithm; Fraunhofer does not produce soundbar hardware). Other audio companies that showed soundbar technology include Qualcomm, Creative Labs and SotonAudio Labs.

Holographic Displays

Holograms used as visual displays made multiple appearances this year, making us feel as if we had stepped into 3018 instead of 2018. British startup Kino-mo had holographic ads floating above Eureka Park, the show's startup hub (where we were also exhibiting, in the AV section). Other holographic tech on display included Merge VR's Holo Cube, which lets users interact with holograms, and Hologruf, which showcased 3D holographic displays.

Augmented Reality

AR has seen a spike in interest over the past few months, with industry bigwigs such as Microsoft, Amazon, Dell, and even StubHub investing in the technology on the promise of its future popularity among consumers. The G'Audio team checked out some interesting AR booths at Eureka Park that featured both hardware and software, such as messenger apps.

Virtual Reality

Although VR has experienced many ups and downs this past year, CES proved the industry is still advancing, offering better-quality content, new technology and more affordable hardware.

Here are some exciting VR tech updates:

Tobii Eye Tracking is pretty self-explanatory: the company's eye-tracking technology has been integrated into the HTC Vive and can greatly improve reaction times in VR. NextVR, a fellow SoCal company, has improved its livestreaming video solution, which is compatible with most HMDs, and is working toward incorporating 6DoF (six degrees of freedom) into the new solution. Contact CI Haptic Gloves simulate the sensation of touch by recreating the way the muscles of the human hand move.

What’s New in the World of VR Headsets

There were many new HMDs showcased throughout the week. The biggest HMD news was HTC's announcement of the Vive Pro and the Vive Wireless Adapter, which promise a more user-friendly experience with higher resolution and lighter weight. Kopin displayed its Elf reference design, a super-slim reference VR headset with 2K OLED displays. Huawei announced its VR 2 headset, which will support an IMAX virtual giant-screen experience and will debut in China this January. Pimax released its ultra-wide 8K-resolution HMD, and iQIYI Intelligence showed its QIYU VR II, which also supports up to 8K resolution and can recognize VR content in various video encoding formats. Additionally, Facebook teamed up with Xiaomi and Qualcomm for the new Oculus Go headset.

Going Beyond Gaming and Cinematic VR

VR is a multifaceted medium that goes beyond the entertainment and gaming sectors and can be used for a multitude of purposes, including education, healthcare and even training. Many companies working in these areas exhibited at CES this year. In particular, G'Audio enjoyed visiting Looxid Lab, which combines brain-wave sensors and eye tracking to analyze users' emotions as well as where they are looking within VR content. The company also offers an analytics service to advertisers and research firms for marketing purposes. We've also seen an increase in VR as a tool for training employees, as at one UK startup that specializes in industrial training programs developed in Unity.

G’Audio at CES

We announced some exciting news of our own at CES: we launched our livestreaming audio renderer!

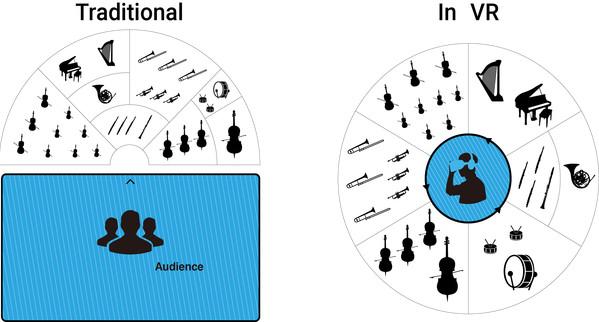

Sol Livestreaming, our audio solution for 360-degree video, transcends the limitations of current livestreaming formats. Now users can experience the full sound of live entertainment and sports events as if they had the best seat in the house, without being physically present.

Specifically designed to deliver Ambisonics audio signals, Sol Livestreaming provides an accurate sense of the entire soundscape. We've also adapted our GA5 format for livestreaming and squeezed B-format Ambisonics into the popular AAC codec. Using this ubiquitous codec lets Sol Livestreaming and its renderer be adopted easily across multiple platforms, bringing truly immersive audio to content creators and consumers alike.
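For readers new to Ambisonics, a quick illustration of what "B-format" means may help. First-order B-format describes the whole soundfield with four channels: an omnidirectional W channel plus three figure-of-eight channels (X, Y, Z) pointing front/back, left/right and up/down. The Python sketch below encodes one mono source at a fixed direction into those four channels. It assumes the traditional FuMa convention (W scaled by 1/√2, azimuth positive to the left), and the function name `encode_foa_fuma` is a hypothetical choice for illustration. It is not G'Audio's GA5 encoder or the Sol Livestreaming pipeline; it only shows the kind of four-channel signal that has to travel through a codec such as AAC.

```python
import math
from typing import List, Sequence, Tuple


def encode_foa_fuma(
    samples: Sequence[float], azimuth_deg: float, elevation_deg: float
) -> Tuple[List[float], List[float], List[float], List[float]]:
    """Hypothetical helper: encode a mono signal into first-order B-format.

    Assumes the FuMa convention: azimuth is measured counterclockwise from
    straight ahead (positive = left), elevation upward from the horizon.
    Returns the W, X, Y and Z channels as separate lists.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)

    # Direction-dependent gains, computed once for a static source.
    gain_w = 1.0 / math.sqrt(2.0)          # omnidirectional part, -3 dB in FuMa
    gain_x = math.cos(az) * math.cos(el)   # front/back figure-of-eight
    gain_y = math.sin(az) * math.cos(el)   # left/right figure-of-eight
    gain_z = math.sin(el)                  # up/down figure-of-eight

    # Every output channel is just the input scaled by its gain.
    w = [s * gain_w for s in samples]
    x = [s * gain_x for s in samples]
    y = [s * gain_y for s in samples]
    z = [s * gain_z for s in samples]
    return w, x, y, z


if __name__ == "__main__":
    # A source placed 90 degrees to the listener's left, on the horizon:
    # the energy lands in W and Y, while X and Z stay (numerically) near zero.
    w, x, y, z = encode_foa_fuma([1.0, 0.5], azimuth_deg=90.0, elevation_deg=0.0)
    print("W:", w)
    print("X:", x)
    print("Y:", y)
    print("Z:", z)
```

Because a static source reduces to four fixed gains, the four channels stay tightly correlated, which is one reason first-order Ambisonics is a practical fit for standard multichannel audio codecs. A real production encoder would also handle moving sources, higher orders and a chosen channel-ordering convention.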

Overall, CES was an action-packed week full of new and exciting technology. Most importantly for us, the many booths in the spatial audio realm showed that the industry continues to grow and advance. We can't wait to see how this year will unfold!